Let's look at alternatives:

- Modify the query.

- Start a new thread.

- Remove sources (if manually added).

We remember emotional moments more clearly than everyday details primarily due to the role of emotions in enhancing memory encoding, consolidation, and retrieval. Research indicates that emotionally charged experiences activate brain regions such as the amygdala, which then facilitate the encoding of memories in the hippocampus, making emotional memories more vivid and easier to recall than neutral ones[6].

The process is influenced by two key dimensions of emotion: arousal and valence. Arousal tends to heighten attention towards significant stimuli, allowing key details to be stored more effectively while peripheral details may be neglected[3]. For instance, during emotional events, individuals focus intently on central elements, such as an accident scene, often forgetting surrounding details like the background or context[4].

Moreover, the emotional state at the time of an event can affect the likelihood of recalling specific details later[5]. The result is a central/peripheral memory trade-off: we may have strong memories of the emotional event itself but less clarity about surrounding details. Therefore, while emotions enhance memory for critical elements, they can diminish our ability to recall a full spectrum of contextual information[2].

In summary, strong emotions sharpen our focus on key details, making those memories more durable but often at the expense of surrounding contextual cues, leading to a more selective memory of emotional events.

Get more accurate answers with Super Pandi, upload files, personalised discovery feed, save searches and contribute to the PandiPedia.

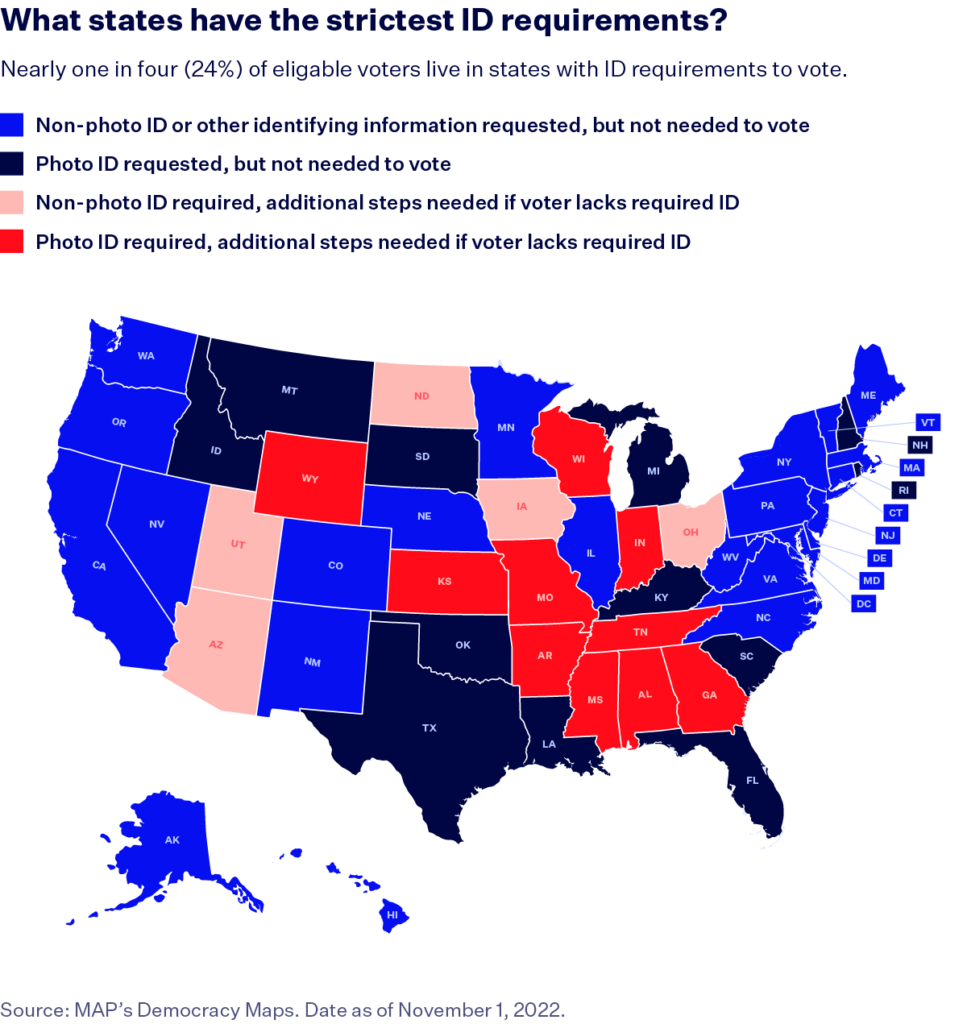

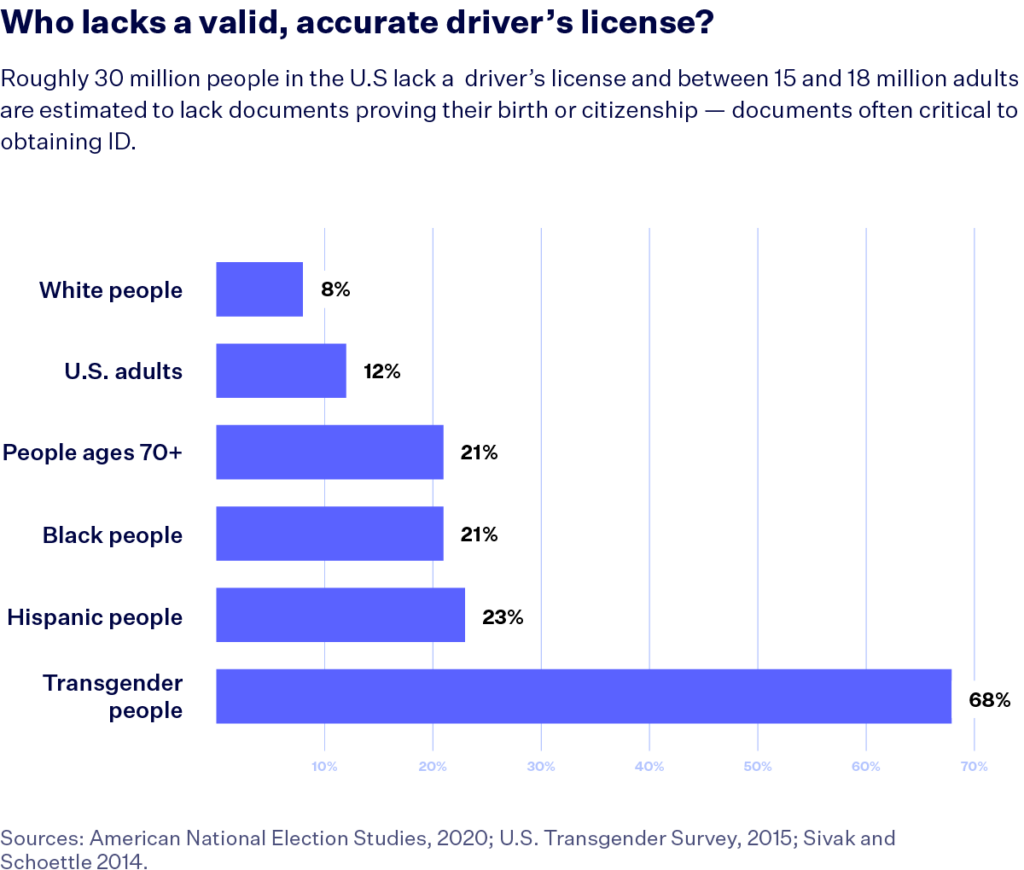

Voter ID laws have emerged as a contentious issue within the electoral landscape both in the United States and the United Kingdom, significantly affecting voter access and participation. While proponents argue that such measures enhance electoral integrity, numerous studies and reports suggest that they disproportionately disenfranchise vulnerable populations.

Barriers to Accessing Voter ID

The Movement Advancement Project elucidates considerable barriers to obtaining ID in the U.S. that transcend mere inconvenience. Factors such as the necessity for multiple forms of additional documentation, financial burdens, and service availability pose substantial obstacles to many individuals. About 15 to 18 million people in the U.S. lack access to essential documents that prove their birth or citizenship, crucial for acquiring identification[1]. The financial implications are dire, particularly for marginalized groups; for example, one-third of transgender individuals reported spending over $250 on name changes to match their gender identity, with many unable to afford these costs[1].

In the UK, the situation reflects similar concerns following the introduction of voter ID requirements. Data from the May 2023 local elections revealed that approximately 14,000 individuals were turned away for lacking the necessary photo ID, with the proportion higher among ethnic minorities and unemployed voters[6]. The Electoral Commission acknowledged that this figure likely underestimated the actual number of disenfranchised voters since many potential voters may have turned away upon learning about ID requirements[6].

Impact on Marginalized Communities

Voter ID laws exacerbate existing inequalities, disproportionately impacting communities of color and low-income individuals. The Brennan Center highlights a significant racial turnout gap following the implementation of strict voter ID laws in various states, with research indicating that these measures hinder Black and Latino voters more acutely than their white counterparts[3]. In North Carolina, studies found that such laws continued to depress turnout even after they were repealed, indicating a lingering effect on voter behavior[3].

In the UK, similar concerns have been raised regarding young voters and those without stable economic standing. The Good Law Project criticized the Elections Act 2022, asserting that the list of acceptable IDs fails to represent younger citizens effectively, thus creating barriers specific to this demographic[5]. As youth tend to favor progressive candidates, disenfranchising them poses a political risk for the ruling government and raises moral questions about fairness in the electoral process[5].

Voter Turnout and Engagement

The evidence for the adverse effects of voter ID laws on turnout is compelling. A significant body of research correlates strict ID requirements with decreased voter participation rates among marginalized communities. In Texas, voters of color were found to be disproportionately barred from voting due to ID requirements, suggesting that these laws are not merely procedural but serve as structural barriers to engagement[3]. Although some argue that voter ID laws have a minimal impact on overall turnout, the broad consensus among studies is that they hinder access for vulnerable groups, making participation in the electoral process more difficult for them[3].

In the UK, although approximately two-thirds of Britons support voter ID laws, there remains a palpable concern about the detrimental impacts on turnout, especially for groups already facing challenges in accessing the electoral process[2][6]. Overall awareness of the new rules is high; however, significant segments of the populace, particularly among younger demographics, remain uninformed about these requirements, further complicating their ability to vote[2].

Legal and Political Ramifications

The political ramifications of implementing voter ID laws are profound. Critics argue that they serve as tools for disenfranchisement rather than measures of integrity. For instance, in the U.S., Republicans have faced accusations of exploiting voter ID laws for electoral advantage, as evidenced by strict regulations that often target demographic groups less likely to possess the required IDs[1].

In the UK, the introduction of voter ID has faced legal challenges based on claims of unlawful disenfranchisement impacting individuals with disabilities and other marginalized populations[5]. The discourse surrounding these laws often centers on balancing electoral integrity with ensuring equitable access to voting rights, posing significant questions about democracy's inclusivity and fairness.

Conclusion

The implications of voter ID laws are profound and multifaceted, affecting how segments of the population engage with democracy. While designed with the intention of safeguarding electoral integrity, the resultant disenfranchisement reflects systemic inequities that undermine the very principles of democratic participation. Addressing these issues requires a re-evaluation of ID requirements and a concerted effort to make the electoral process more accessible for all citizens, regardless of socio-economic status or background.

Comedy festivals play a significant role in shaping local culture by enhancing community engagement, boosting economic activity, and facilitating social discourse. This multifaceted impact emerges from various studies and reports, demonstrating the importance of comedy as an art form and its ability to influence societal attitudes.

Community Engagement and Social Connection

One of the primary impacts of comedy festivals is their ability to foster community connections and enhance the quality of life. By providing spaces for people to gather and share experiences through humor, these festivals are instrumental in combatting loneliness and isolation. They not only bring diverse groups together but also create opportunities for individuals to connect over shared interests. As outlined in a program aimed at supporting community-organized festivals, the festivals are designed to 'create more opportunities to bring people together to make connections doing something they enjoy' ([3]). This sense of belonging and community is essential for cultural cohesion.

Economic Impact

Comedy festivals also contribute to the local economy. They often stimulate business for local vendors, hotels, restaurants, and other service providers. The influx of visitors for these festivals generates economic activity that benefits the entire community. Furthermore, investing in local culture through such festivals can attract further investments and opportunities for local artists and creators, thereby enriching the cultural landscape ([4]).

Social Capital Development

The role of comedy festivals in generating social capital is notable. Social capital refers to the networks, relationships, and trust that facilitate cooperation among individuals within a community. Festivals create a 'cluster of events with an overarching theme,' encouraging audience participation and interaction ([4]). This not only improves community bonding but also allows local acts to gain visibility, enhancing their careers while contributing to the overall cultural fabric of the area.

Influencing Attitudes and Perspectives

Comedy has historically played a vital role in addressing and challenging societal issues. As researchers suggest, live performances can illuminate major social topics, including health, race, and disability. The exploration of comedic performances, particularly those by disabled comedians, has shown potential in reshaping public perceptions. Comedy “can fulfill a serious function in society” by allowing performers to engage with issues of prejudice and stereotyping in a relatable way ([5]). Thus, comedy festivals become platforms for social change, encouraging discussions that transcend traditional boundaries.

Bridging Societal Divides

Moreover, comedy festivals can bridge divides within broader cultural contexts. By emphasizing inclusivity and diversity, these festivals offer marginalized voices and perspectives a platform that might not be available in more mainstream venues. They can challenge the status quo and engage audiences in critical discussions, making humor a tool for social reflection and growth.

Perceptions of Art Forms

Despite the significant role comedy plays in local culture, there are still challenges in recognizing it as a legitimate art form equivalent to other creative expressions. Many comedy festivals aim to enhance recognition and respect for comedy in wider cultural contexts. Festivities themed around comedy contribute to a shift in perception, illustrating that it is not merely 'juvenile media or just for kids' ([5]). This increased recognition can lead to more robust public support and funding for future events.

Conclusion

In summary, comedy festivals significantly impact local culture by fostering community ties, stimulating economic activity, and serving as platforms for social discourse. These events enhance social capital while offering a medium that challenges societal norms and recognizes diverse experiences. By celebrating comedy's unique ability to connect people and address essential issues, these festivals not only entertain but also enrich the cultural landscape of their host communities. As comedy continues to gain attention as a meaningful art form, its influence on local culture is likely to expand, fostering even greater community engagement and societal understanding.

Ancient civilizations have significantly shaped modern society through various advancements in culture, technology, governance, and other fields. By understanding and appreciating these contributions, we gain insight into the development and progression of human civilization. This report explores how several ancient civilizations have influenced today's world.

Technological and Agricultural Advancements

Mesopotamia

Mesopotamia, often recognized as the world's first civilization, made numerous contributions that are still relevant today. One of the most notable is the invention of cuneiform writing, which revolutionized communication and record-keeping[3]. Mesopotamians also developed one of the earliest known calendars, structured around lunar phases and used effectively in agriculture, which influenced modern calendar systems[3]. The plow, another Mesopotamian invention, significantly enhanced agricultural productivity and remains foundational in modern farming[3]. Additionally, Mesopotamians invented the wheel and established the first forms of urban planning and irrigation systems[1][3][5].

Egypt

The ancient Egyptians were pioneers in various fields, particularly in architecture and engineering. Their pyramids and temples exemplify advanced building techniques and a deep knowledge of mathematics[2]. Egyptians also made significant medical advancements, including the development of surgical methods and the use of herbs and drugs, which influence medical practices even today[1][5][7]. They contributed to the field of writing through the creation of hieroglyphics, one of the earliest writing systems, influencing modern alphabets and written communication[2][3][5].

China

Ancient China was a powerhouse of innovation. Chinese inventions such as paper, printing, gunpowder, and the compass are integral parts of modern technology[1][2][3]. The Chinese also made early advancements in silk production, block printing, and acupuncture, which remain relevant in today's textile industry, publishing, and healthcare[1][5][6].

Philosophical and Scientific Contributions

Greece

Ancient Greece is renowned for its contributions to philosophy, democracy, and science. Philosophers like Socrates, Plato, and Aristotle laid the foundational principles of Western philosophy, which shaped modern critical thinking, ethics, and political theory[2][10]. The concept of democracy, pioneered in Athens, forms the basis of many contemporary political systems[4]. Furthermore, Greek advancements in science and mathematics, including the work of Pythagoras and Hippocrates, continue to underpin modern scientific and mathematical thought[1][10].

India

India's ancient civilization contributed profoundly to philosophical thought, mathematics, and medicine. The concept of zero and the decimal system, developed by Indian mathematicians, revolutionized mathematics globally[2][3]. Moreover, Indian philosophical schools such as Vedanta, Buddhism, and Jainism have inspired countless spiritual and philosophical traditions worldwide[1][2]. Practices like yoga and Ayurveda, originating from ancient India, remain popular for their holistic approach to health and wellness[2][3].

Legal and Governance Systems

Rome

The Roman Empire's influence on modern law and governance is vast. The Roman legal system, with its principles of justice and codification of laws, serves as the foundation for many contemporary legal systems, including those of the United States and Europe[2][4]. Roman engineering feats, such as the construction of roads, aqueducts, and the use of concrete, have set standards for modern infrastructure[1][4][8]. The concept of citizenship and civic duty promoted by the Romans is echoed in today's political discourse[4].

Persia

The Persian Empire's centralized administration and extensive trade networks played a crucial role in connecting diverse cultures and economies[2]. Persian art, literature, and architecture have also left a lasting legacy, influencing artistic expressions and cultural exchanges in modern society[2][3].

Contributions to Arts and Culture

Egypt

Ancient Egypt's artistic and architectural achievements have inspired countless artists and architects throughout history. The pyramid form, for instance, continues to influence modern architecture, seen in structures like the Louvre Pyramid in Paris[1][3][7]. Egyptian literature and art provide valuable insights into their culture and beliefs, which continue to captivate contemporary audiences[3][7].

Rome

Roman contributions to art and architecture are profound, with innovations such as the use of arches, domes, and concrete in construction[1][2][4][8]. The Colosseum's design has influenced the structure of modern sports stadiums, and Roman sculpture and frescoes remain admired for their realism and detail[4][8].

Maya and Aztec

The Maya and Aztec civilizations made significant advancements in astronomy, mathematics, and architecture. The Maya calendar, known for its accuracy, has influenced modern timekeeping[1][2]. Their architectural achievements, including the construction of pyramids and cities, display a high level of engineering skill and artistic creativity[1][3].

Conclusion

The contributions of ancient civilizations to modern society are extensive and multifaceted. From technological innovations and agricultural practices to philosophical thought and legal systems, the legacy of these early cultures continues to shape our world. By studying and appreciating these ancient advancements, we gain a deeper understanding of the roots of contemporary society and the enduring impact of human ingenuity and creativity.

- First Human Photo. In 1838, Paris's bustling Boulevard du Temple turned ghostly: a lone bootblack, frozen by a 10-minute exposure, proves only the motionless are captured. What still moment defines you?

Transcript

Imagine a vocoder as a painter combining two signals: one provides the words (the voice, or modulator) and the other supplies the tone (the synth, or carrier). The vocoder splits the voice into multiple frequency bands and uses the level in each band to shape the same band of the synth tone, crafting a striking robotic effect. Hear the transformation in three quick steps: first a dry voice, then a dry synth, and finally the vocoded result where the bands sculpt a new, electrifying sound. For a clearer result, over-articulate your words so each band captures maximum detail, ensuring your vocoder effect remains crisp and intelligible.
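The band-splitting idea described in the transcript can be sketched in a few lines of Python. This is only an illustrative sketch, not the method used in the recording: the function names (`bandpass`, `envelope`, `vocode`), the four band centers, the filter Q, and the 30 Hz envelope smoothing are all assumptions chosen for the example, and the band-pass filter is a standard second-order (biquad) design rather than anything the transcript specifies.

```python
import math

def bandpass(signal, center_hz, sample_rate, q=4.0):
    """Second-order band-pass filter (assumed design: 0 dB peak-gain biquad)."""
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    b0, b2 = alpha, -alpha                      # b1 is zero for this filter
    a0, a1, a2 = 1.0 + alpha, -2.0 * math.cos(w0), 1.0 - alpha
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

def envelope(signal, sample_rate, smooth_hz=30.0):
    """Track the loudness of a band: rectify, then smooth with a one-pole low-pass."""
    coeff = math.exp(-2.0 * math.pi * smooth_hz / sample_rate)
    level = 0.0
    out = []
    for x in signal:
        level = coeff * level + (1.0 - coeff) * abs(x)
        out.append(level)
    return out

def vocode(modulator, carrier, sample_rate, band_centers):
    """Shape each carrier band with the envelope of the matching modulator band."""
    n = min(len(modulator), len(carrier))
    output = [0.0] * n
    for center in band_centers:
        env = envelope(bandpass(modulator[:n], center, sample_rate), sample_rate)
        car = bandpass(carrier[:n], center, sample_rate)
        for i in range(n):
            output[i] += env[i] * car[i]
    return output

# Toy stand-ins for the "dry voice" and "dry synth" (both hypothetical signals).
SAMPLE_RATE = 8000
LENGTH = 4000
BANDS = [250.0, 500.0, 1000.0, 2000.0]
# "Voice": a 500 Hz tone that stops halfway through.
voice = [math.sin(2.0 * math.pi * 500.0 * i / SAMPLE_RATE) if i < LENGTH // 2 else 0.0
         for i in range(LENGTH)]
# "Synth": a 110 Hz sawtooth, rich in harmonics for the bands to sculpt.
synth = [2.0 * ((110.0 * i / SAMPLE_RATE) % 1.0) - 1.0 for i in range(LENGTH)]
vocoded = vocode(voice, synth, SAMPLE_RATE, BANDS)
```

In this sketch the synth is only audible while the voice has energy in a band, which is why the output fades out once the toy voice stops. Real vocoders typically use many more bands than the four assumed here, which is one reason careful articulation helps each band pick up detail.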

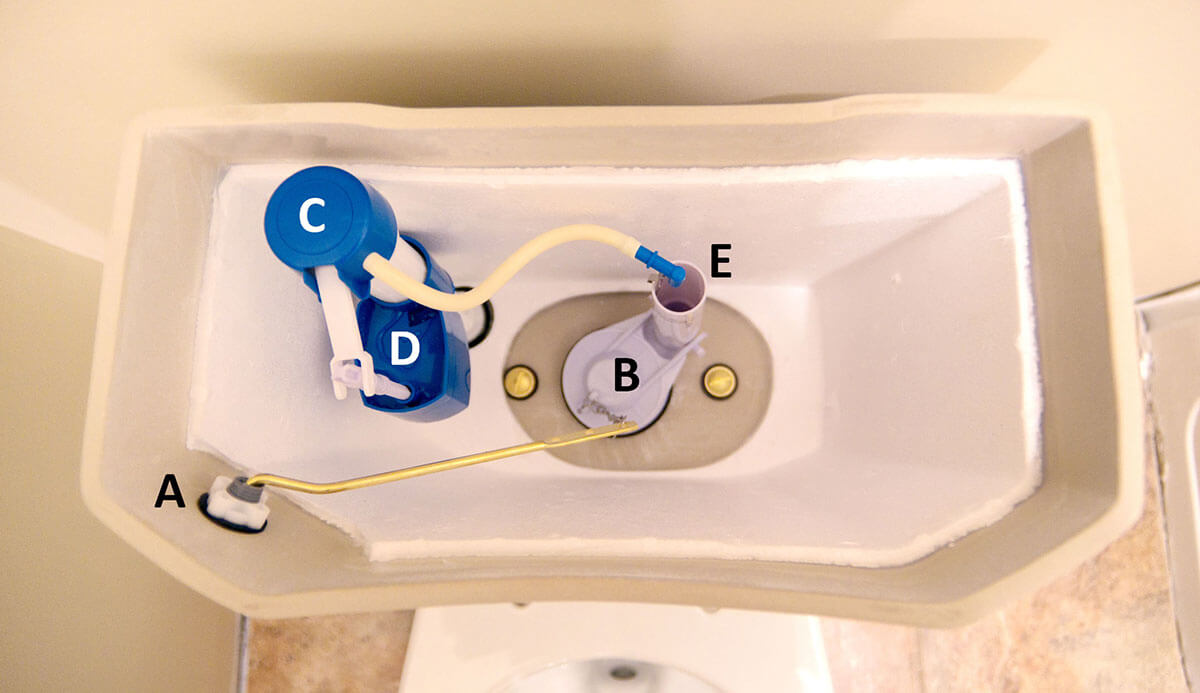
