Overview of AI Model Marketplaces

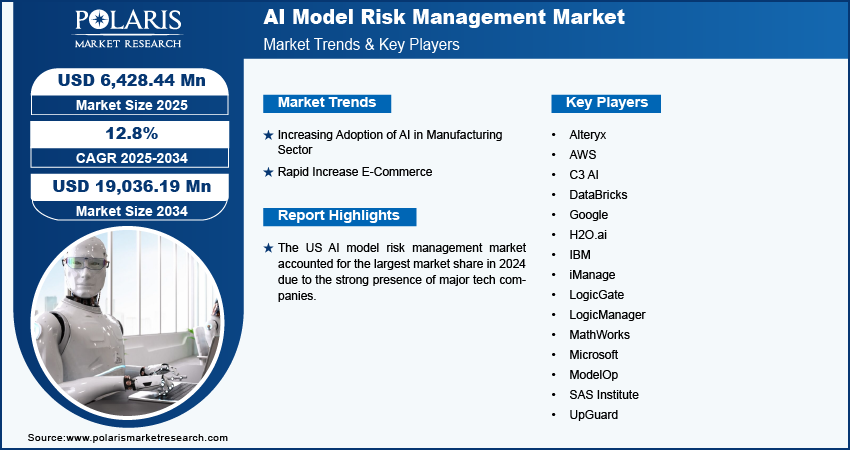

Digital marketplaces for pretrained AI models have emerged as hubs where models are traded, refined, and repurposed. Researchers and developers can obtain a wide range of machine learning models and their variants there, which accelerates innovation and spreads technical expertise more widely[4]. These marketplaces do more than provide access to technical artefacts: they also create a need for rigorous quality assurance, clear licensing terms, and a well-defined governance model that supports both open innovation and responsible use[2].

Licensing and Quality Assurance

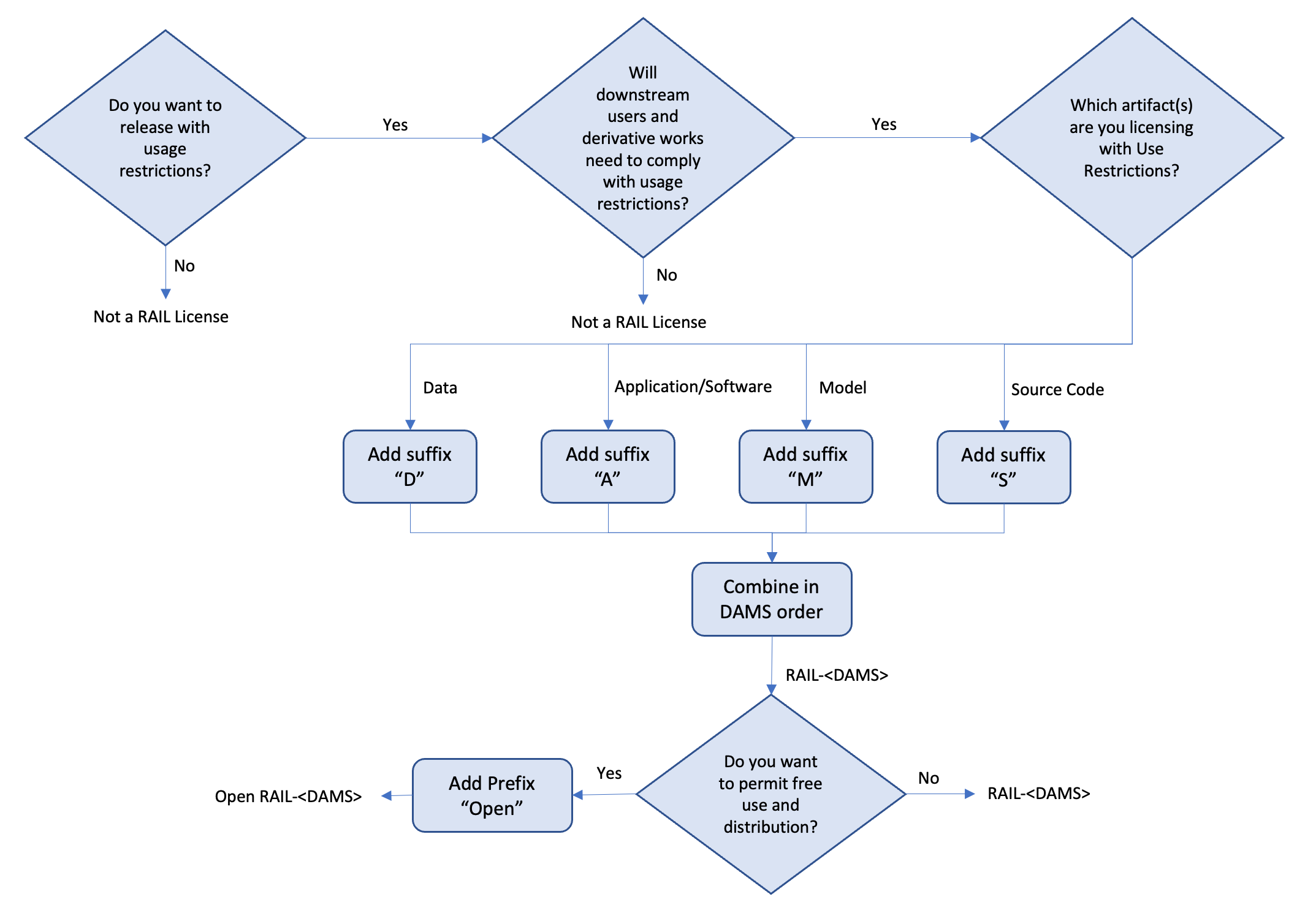

A key feature of AI model marketplaces is the adoption of specialized licensing schemes that treat model weights differently from traditional source code. Developers and organisations are increasingly licensing models under dedicated frameworks such as Responsible AI Licenses (RAIL) and OpenRAIL licenses, which impose specific use restrictions while allowing downstream users to build derivative works under similar constraints[2]. These licenses address quality assurance concerns by incorporating technical limitations, usage guidelines, and safeguards against misuse, with the aim that models traded in these marketplaces meet both ethical and functional standards[2]. By clearly stating permitted applications and restrictions, a license provides legal clarity and also acts as an assurance mechanism for buyers and users, helping them navigate questions of intellectual property and model performance[2].
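The way use restrictions carry over to derivative works can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `ModelLicense` class, its `permits` and `derive` methods, and the restriction labels are hypothetical and do not reproduce the text or schema of any actual RAIL or OpenRAIL license.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelLicense:
    """Hypothetical license record; not an actual RAIL or OpenRAIL schema."""

    name: str
    restricted_uses: frozenset = frozenset()

    def permits(self, intended_use: str) -> bool:
        # A use is allowed unless the license explicitly restricts it.
        return intended_use not in self.restricted_uses

    def derive(self, name: str, extra_restrictions: frozenset = frozenset()) -> "ModelLicense":
        # A derivative keeps every upstream restriction and may add more,
        # but it can never drop one.
        return ModelLicense(name, self.restricted_uses | extra_restrictions)


# Illustrative restriction labels only; real licenses define their own terms.
base = ModelLicense("example-openrail", frozenset({"mass-surveillance", "medical-advice"}))
fine_tuned = base.derive("example-openrail-ft", frozenset({"automated-hiring"}))
```

In this sketch a marketplace could call `fine_tuned.permits(...)` before listing or deploying a derivative model. Because `derive` only ever takes the union of restriction sets, a downstream model cannot end up with fewer restrictions than the model it was built from.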

Regulatory Implications in a Rapidly Evolving Market

The rapid expansion of AI marketplaces has raised significant regulatory challenges, particularly concerning market concentration, liability for harms, and the balance between innovation and public safety. Governments and regulators face a dilemma when attempting to intervene; they must design policies that not only address potential externalities but also avoid stifling the beneficial dynamics of market experimentation[3]. For instance, when innovation carries risks, regulatory measures may include ex ante restrictions such as bans or ex post measures like liability rules, both of which require policymakers to evaluate uncertain future harms while preserving the impetus for breakthrough developments[3]. Moreover, given the inherent uncertainty and rapid evolution of AI technologies, a nimble and adaptive regulatory framework is critical to ensure that rules remain relevant and do not inadvertently penalize further innovation or the development of safety features within AI systems[3].

Collaborative Ecosystems and Inclusive Governance

New approaches to governance are emerging alongside the growth of AI marketplaces, particularly governance models that promote collaboration across society, government, and the market. An illustrative example comes from India's integrated AI ecosystem, inspired by the Samaj, Sarkar, Bazaar philosophy, which emphasizes the synergy between public institutions, private companies, and community stakeholders[4]. This approach supports high-end technical development and quality assurance through public-private partnerships, and it seeks to distribute the benefits of AI equitably by aligning regulatory frameworks with societal needs and ethical standards[4]. In such ecosystems, the responsibility for model quality and safe deployment is shared, with government initiatives and academic research complementing private enterprise efforts to drive innovation in a responsible and inclusive manner[4].

Balancing Innovation, Risks, and Market Governance

The interplay between market-driven innovation and regulatory oversight is central to the future of AI model marketplaces. On one hand, these marketplaces enable rapid diffusion of technical expertise and drive economic benefits by making high-quality models readily available to a diverse user base[4]. On the other hand, there are inherent risks such as model misuse, potential legal ambiguities regarding copyright, and challenges in ensuring that trade practices do not lead to monopolization or reduced safety standards[2]. Regulatory frameworks must therefore be carefully calibrated to encourage experimentation and innovation while simultaneously imposing safeguards to mitigate negative social impacts, such as misinformation or discriminatory outcomes[3]. This balance requires ongoing dialogue among developers, policymakers, and the broader community to continuously redefine best practices in licensing, quality assurance, and ethical model use.

Conclusion

AI model marketplaces represent a significant shift in how technology is developed, deployed, and governed, blending open innovation with targeted regulatory oversight. Specialized licensing frameworks, including Responsible AI Licenses and OpenRAIL, have emerged as essential components in governing the use and distribution of pretrained models, helping to maintain both quality assurance and ethical use. At the same time, the evolving regulatory landscape highlights the need for agile policies that account for the uncertainties and rapid technological advancements inherent in AI. Efforts such as India's holistic approach to AI governance suggest that collaborative, multi-stakeholder models can serve as blueprints for balancing innovation with societal responsibilities. As AI model marketplaces continue to grow, building robust governance mechanisms will be critical for maximizing the benefits while mitigating the associated risks.
