Recent Advances in Continual Learning: A 2026 Research Overview

Continual learning, also known as lifelong or incremental learning, addresses a fundamental limitation of modern artificial intelligence: most models cannot acquire new knowledge and skills over time without erasing previously learned information[4]. This failure mode, known as 'catastrophic forgetting', is a major barrier to truly adaptive and sustainable AI systems, because the usual workaround of retraining large models from scratch is computationally expensive[1][2][4]. Research in 2026 has made notable progress on this problem, moving beyond incremental improvements to propose new foundational paradigms and highly efficient adaptation techniques.

This report synthesizes key research findings from major 2026 machine learning conferences, such as NeurIPS and ICLR. It examines Nested Learning, a new framework that re-imagines model optimization, together with its proof-of-concept 'Hope' architecture. It also explores practical, parameter-efficient methods such as CoLoR, which applies Low Rank Adaptation (LoRA) to continual learning in transformers. Finally, the report outlines the broader strategic directions guiding the application of continual learning to large-scale foundation models, including Continual Pre-Training, Continual Fine-Tuning, and the orchestration of multiple AI agents.

A New Paradigm: Nested Learning

A significant contribution from NeurIPS 2026 is the paper 'Nested Learning: The Illusion of Deep Learning Architectures'[1][10]. This work introduces Nested Learning (NL) as a new paradigm that moves beyond the conventional view of deep learning models. Instead of treating a model as a single, monolithic optimization process, NL represents it as a system of nested, multi-level, and potentially parallel optimization problems, each with its own internal information flow and update frequency[1][5][10]. This neuro-inspired framework recasts learning as a hierarchical, dynamic process and argues that a model's architecture and its training algorithm are not fundamentally different: they are different levels of the same optimization process[1][11]. The stated aim is to give models a path to learn continually, self-improve, and memorize more effectively[10].

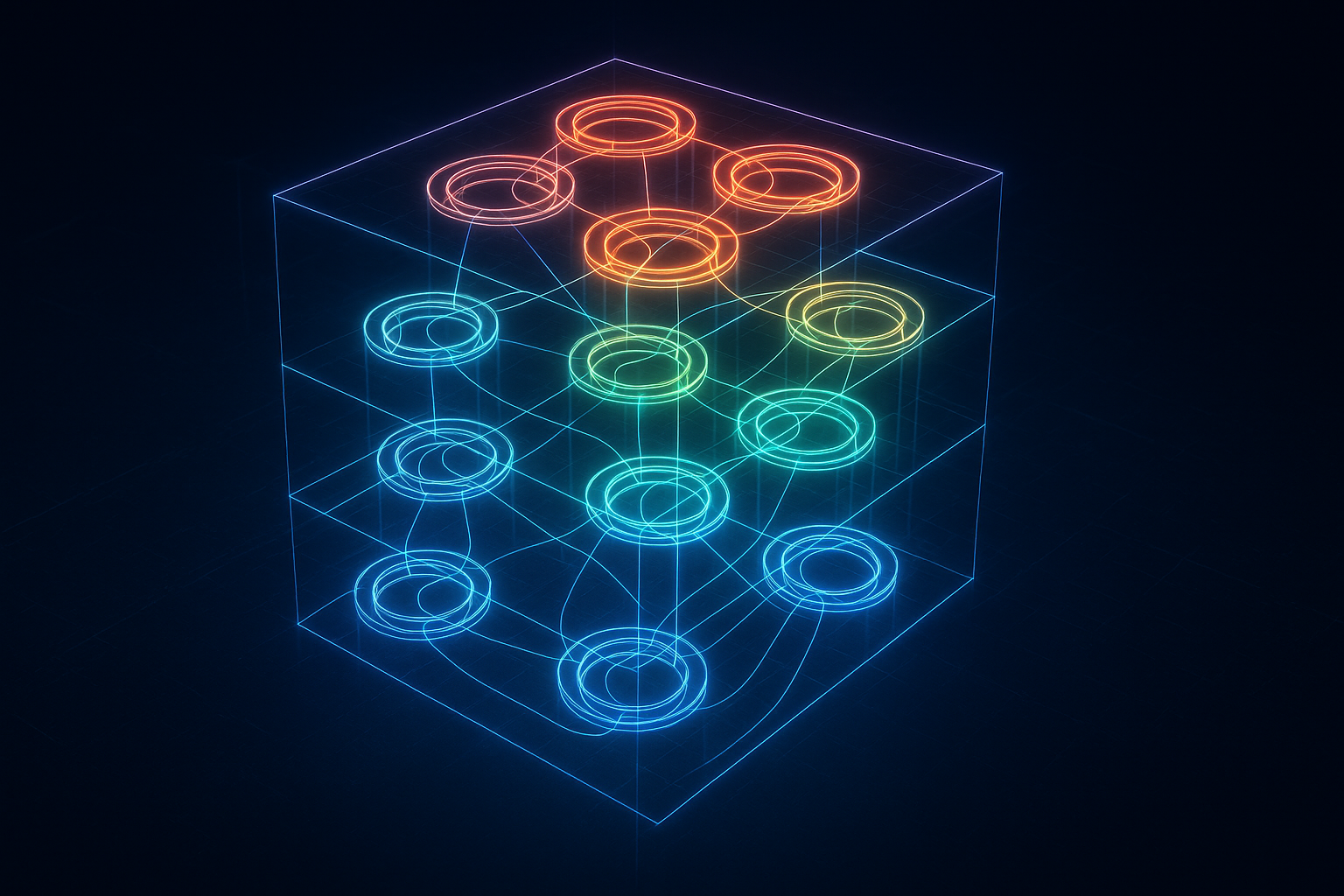

Conceptualizing the Nested Learning Paradigm

An abstract illustration of a neural network architecture based on the Nested Learning paradigm. Unlike traditional stacked layers, this model features interconnected, multi-level optimization loops, each glowing with a distinct color to signify different update rates and internal workflows. This visualizes the concept of a model as a system of simultaneous, nested learning processes.

Core Contributions of Nested Learning

The paper presents three core contributions to demonstrate the power of the NL framework[10]:

- Deep Optimizers: This concept reframes well-known gradient-based optimizers like Adam and SGD with Momentum. It posits that they are effectively associative memory modules that compress gradients, which motivates the design of more expressive optimizers with deeper memory structures[10].

- Self-Modifying Titans: The research introduces a novel sequence model that is capable of learning how to modify its own update algorithm, enabling a higher degree of adaptability[10].

- Continuum Memory System (CMS): NL proposes a new formulation for memory that generalizes the traditional binary view of 'short-term' and 'long-term' memory[10]. Instead, CMS treats memory as a spectrum of modules that update at different frequencies, allowing for more nuanced information retention[1][2].
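The 'Deep Optimizers' view can be made concrete with SGD with momentum. The sketch below assumes the standard heavy-ball form m_{t+1} = α·m_t − η·g_t with W_{t+1} = W_t + m_{t+1} (this notation and the function names are ours, not necessarily the paper's). Unrolling the recursion shows that the momentum buffer is an exponentially weighted sum of every past gradient, i.e., a fixed-size memory that compresses the whole gradient history.

```python
import numpy as np

def momentum_recursive(grads, alpha, lr):
    """Heavy-ball momentum buffer: m_{t+1} = alpha * m_t - lr * g_t."""
    m = np.zeros_like(grads[0])
    for g in grads:
        m = alpha * m - lr * g
    return m

def momentum_unrolled(grads, alpha, lr):
    """Closed form of the same buffer: m_T = -lr * sum_i alpha**(T-1-i) * g_i."""
    T = len(grads)
    return -lr * sum(alpha ** (T - 1 - i) * g for i, g in enumerate(grads))

rng = np.random.default_rng(0)
grads = [rng.normal(size=3) for _ in range(50)]  # stand-in gradient stream
m_rec = momentum_recursive(grads, alpha=0.9, lr=0.1)
m_unr = momentum_unrolled(grads, alpha=0.9, lr=0.1)
print(np.allclose(m_rec, m_unr))  # True
```

The buffer has the size of a single gradient no matter how many steps have elapsed, and α sets how quickly old gradients fade. Seen this way, momentum is a simple linear memory with a fixed decay, which is the kind of structure NL proposes replacing with more expressive, deeper memories.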

The 'Hope' Architecture: A Proof-of-Concept

To validate these concepts, the researchers developed 'Hope', a self-modifying recurrent architecture based on Titans[1]. Hope integrates Continuum Memory System blocks and can optimize its own memory through a self-referential process, allowing it to take advantage of unbounded levels of in-context learning[1][10]. In experiments, Hope outperformed Titans, Samba, and baseline Transformers, achieving lower perplexity and higher accuracy on a range of language modeling and common-sense reasoning tasks[1]. It also showed strong memory management on long-context 'Needle-in-a-Haystack' (NIAH) tasks, underscoring its potential for continual learning applications[1][10].
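The frequency-based update rule behind CMS blocks can be sketched as follows. This is a minimal illustration under stated assumptions, not the Hope implementation: it uses toy linear blocks instead of MLPs, a squared-error loss, and hand-picked periods of 1, 4, and 16 steps. Each block sums its gradients over its own chunk of steps and applies them only when that chunk ends. The class name, periods, and learning rate are hypothetical.

```python
import numpy as np

class ContinuumMemoryChain:
    """Hypothetical CMS-style toy: a chain of linear blocks where block l
    applies its accumulated gradient only every periods[l] steps."""

    def __init__(self, dim, periods, lr=0.01, seed=0):
        rng = np.random.default_rng(seed)
        self.periods = list(periods)
        self.lr = lr
        # Near-identity init keeps the product of blocks well conditioned.
        self.weights = [np.eye(dim) + 0.01 * rng.normal(size=(dim, dim))
                        for _ in self.periods]
        self.grad_buffers = [np.zeros((dim, dim)) for _ in self.periods]
        self.update_counts = [0 for _ in self.periods]
        self.step_count = 0

    def forward(self, x):
        """Return the input plus every block's output (the last one is y)."""
        activations = [x]
        for W in self.weights:
            activations.append(W @ activations[-1])
        return activations

    def step(self, x, target):
        activations = self.forward(x)
        delta = activations[-1] - target  # dLoss/dy for 0.5 * ||y - target||^2
        loss = 0.5 * float(delta @ delta)
        # Backpropagate through the chain, accumulating each block's gradient.
        for l in reversed(range(len(self.weights))):
            self.grad_buffers[l] += np.outer(delta, activations[l])
            delta = self.weights[l].T @ delta
        self.step_count += 1
        # Fast blocks update every step; slow blocks wait for their period.
        for l, period in enumerate(self.periods):
            if self.step_count % period == 0:
                self.weights[l] -= self.lr * self.grad_buffers[l]
                self.grad_buffers[l][:] = 0.0
                self.update_counts[l] += 1
        return loss

chain = ContinuumMemoryChain(dim=4, periods=(1, 4, 16))
rng = np.random.default_rng(1)
x, target = rng.normal(size=4), rng.normal(size=4)
losses = [chain.step(x, target) for _ in range(32)]
print(chain.update_counts)  # [32, 8, 2]
```

After 32 steps the fastest block has been updated 32 times and the slowest only twice, so the slow blocks integrate information over longer windows, which is the intuition behind treating memory as a spectrum of update frequencies rather than a short-term/long-term split.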

Efficient Adaptation with Low Rank Adaptation (LoRA)

While foundational paradigms like Nested Learning push theoretical boundaries, another critical research thrust in 2026 focuses on practical and efficient methods for updating existing large models. Pre-trained transformers excel when fine-tuned on specific tasks, but they often struggle to retain this performance when data characteristics shift over time[12]. Addressing this, a paper presented at NeurIPS 2026 investigates the use of Low Rank Adaptation (LoRA) for continual learning[7][12].

The proposed method, named CoLoR, challenges the prevailing reliance on prompt-tuning-inspired methods for continual learning[12]. Instead, it applies LoRA to update a pre-trained transformer, enabling it to perform well on new data streams while retaining knowledge from previous training stages[12]. The key finding is that this LoRA-based solution achieves state-of-the-art performance across a range of domain-incremental learning benchmarks. Crucially, it accomplishes this while remaining as parameter-efficient as the prompt-tuning methods it seeks to improve upon[7][12].
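For readers unfamiliar with LoRA, its core mechanism is to freeze a pre-trained weight matrix W0 and learn only a low-rank update B·A, so an adapted layer computes W0·x + (α/r)·B·A·x. The NumPy sketch below is a generic LoRA linear layer, not CoLoR's code; the shapes, the α/r scaling, and the zero initialization of B follow the common LoRA recipe, and the class name is hypothetical. How CoLoR organizes adapters across training stages is not shown here.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W0 plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, w0, rank, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = w0.shape
        self.w0 = w0                                    # frozen pre-trained weight
        self.a = 0.01 * rng.normal(size=(rank, d_in))   # trainable down-projection
        self.b = np.zeros((d_out, rank))                # trainable; zero init => no change at start
        self.scale = alpha / rank

    def __call__(self, x):
        return self.w0 @ x + self.scale * (self.b @ (self.a @ x))

    def trainable_params(self):
        return self.a.size + self.b.size

    def merged_weight(self):
        """Fold the adapter into one matrix so inference costs nothing extra."""
        return self.w0 + self.scale * self.b @ self.a

w0 = np.random.default_rng(42).normal(size=(768, 768))
layer = LoRALinear(w0, rank=8)
x = np.ones(768)
print(np.allclose(layer(x), w0 @ x))      # True: B starts at zero
print(layer.trainable_params(), w0.size)  # 12288 589824
```

With rank 8 on a 768×768 layer, only about 2% of the layer's parameters are trainable, which is why LoRA can compete with prompt-tuning on parameter efficiency while the frozen W0 preserves the pre-trained knowledge.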

Strategic Directions for Foundation Models

Beyond specific algorithms, the continual learning field in 2026 is increasingly focused on establishing strategic frameworks for the entire lifecycle of large-scale foundation models. Research highlights three key directions for enabling these models to evolve effectively over time[3].

- Continual Pre-Training (CPT): This involves incrementally updating a foundation model's core knowledge with new data after its initial, intensive pre-training phase[3]. The primary motivation for CPT is to keep models relevant and effective as new information emerges and data distributions shift, without incurring the prohibitive cost of complete retraining[3][4].

- Continual Fine-Tuning (CFT): CFT is the practice of applying a continuous stream of lightweight, task-specific updates to a model after it has been deployed[3]. This is essential for personalizing models to individual users or specializing them for specific domains. It also allows models to react quickly to domain drift, offering a lower-latency alternative to methods like retrieval-augmented generation (RAG)[3].

- Continual Compositionality & Orchestration (CCO): This forward-looking direction focuses on the dynamic integration of multiple, distinct AI agents over time to solve more complex, higher-level tasks[3]. Rather than adapting a single monolithic network, CCO employs a modular approach where different compositions of models can be orchestrated to adapt to non-stationary environments[3].

The Evolving Research Landscape: Constraints and Future Focus

Analysis of papers from top machine learning conferences in recent years, including ICLR 2026, reveals important trends in the field's priorities[4][9]. A dominant theme is the focus on learning under resource constraints, particularly limited memory. Most research explicitly constrains the amount of past data that can be stored for replay or reference[4][8]. This reflects a drive towards practical applications where storing all historical data is not feasible.
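A common way to keep replay within a fixed memory budget is a reservoir-sampling buffer, which maintains a uniform random sample of the entire stream in a fixed number of slots. The sketch below is a generic illustration of that technique (Algorithm R), not a method taken from the cited papers; the class name and capacity are hypothetical.

```python
import random

class ReservoirBuffer:
    """Fixed-capacity replay memory holding a uniform sample of all items seen."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.num_seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        self.num_seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Keep the new item with probability capacity / num_seen,
            # overwriting a uniformly chosen slot (Algorithm R).
            j = self.rng.randrange(self.num_seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k):
        """Draw up to k stored items to mix into the current training batch."""
        return self.rng.sample(self.items, min(k, len(self.items)))

buffer = ReservoirBuffer(capacity=100)
for example in range(10_000):  # stand-in for a non-stationary data stream
    buffer.add(example)
print(len(buffer.items), buffer.num_seen)  # 100 10000
replay_batch = buffer.sample(8)
```

Memory stays at 100 slots however long the stream runs, which is exactly the kind of explicit storage constraint most of the surveyed papers impose.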

In contrast, the computational cost of continual learning has been a less-explored area. A survey noted that over half of the analyzed papers made no mention of computational costs at all[4]. However, this is changing. The community increasingly recognizes the need to balance performance with practical deployment issues, and future research is expected to push for strategies that operate under tight compute budgets, both with and without memory constraints[4]. This is particularly relevant for on-device learning and the efficient adaptation of large models[8].

Another promising avenue is test-time training. Architectures such as Titans, along with work on End-to-End Test-Time Training, reformulate the model's memory unit as parameters that keep learning during inference: the memory is updated at test time with gradient descent, allowing the model to capture long-term dependencies and refine its predictions on the fly[6]. This offers another path toward genuine continual learning in modern AI systems.
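The test-time update can be illustrated with a linear associative memory M that is trained online to map keys to values. This minimal sketch assumes a squared-error recall loss ℓ(M) = ½‖M·k − v‖² and plain gradient descent with an optional decay term acting as forgetting; Titans adds further components, such as momentum and data-dependent gates, that are omitted here. The key and value are random vectors standing in for projections of an input token, and the function names are hypothetical.

```python
import numpy as np

def recall_loss(memory, key, value):
    """Associative recall loss 0.5 * ||M k - v||^2."""
    err = memory @ key - value
    return 0.5 * float(err @ err)

def memory_update(memory, key, value, lr=0.1, decay=0.0):
    """One test-time gradient step on the recall loss, with optional decay."""
    grad = np.outer(memory @ key - value, key)  # dLoss/dM = (M k - v) k^T
    return (1.0 - decay) * memory - lr * grad

rng = np.random.default_rng(0)
dim = 8
memory = np.zeros((dim, dim))
key = rng.normal(size=dim)
key /= np.linalg.norm(key)  # unit-norm key keeps the step size predictable
value = rng.normal(size=dim)

losses = []
for _ in range(20):  # the same association keeps arriving at test time
    losses.append(recall_loss(memory, key, value))
    memory = memory_update(memory, key, value, lr=0.5)
```

With a unit-norm key and lr = 0.5, each step multiplies the recall error M·k − v by (1 − 0.5), so the loss shrinks by a factor of four per update: the memory learns the association during inference, without any separate training phase.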

Conclusion

The continual learning research landscape in 2026 is characterized by a dynamic interplay between foundational innovation and pragmatic application. On one hand, paradigms like Nested Learning are challenging the core assumptions of deep learning architecture and optimization, paving the way for self-modifying models with more sophisticated memory systems. On the other hand, methods like CoLoR demonstrate a commitment to resource efficiency, enabling large pre-trained models to adapt continually without excessive computational or parameter overhead.

Looking forward, the strategic frameworks of Continual Pre-Training, Fine-Tuning, and Orchestration will likely become standard practice for managing the lifecycle of foundation models. As the field matures, the focus is broadening from simply overcoming catastrophic forgetting to developing robust, efficient, and scalable learning systems that can truly evolve with new data and changing environments. The growing emphasis on computational constraints signals a critical step towards deploying these advanced continual learning capabilities in real-world, resource-limited scenarios.

References
