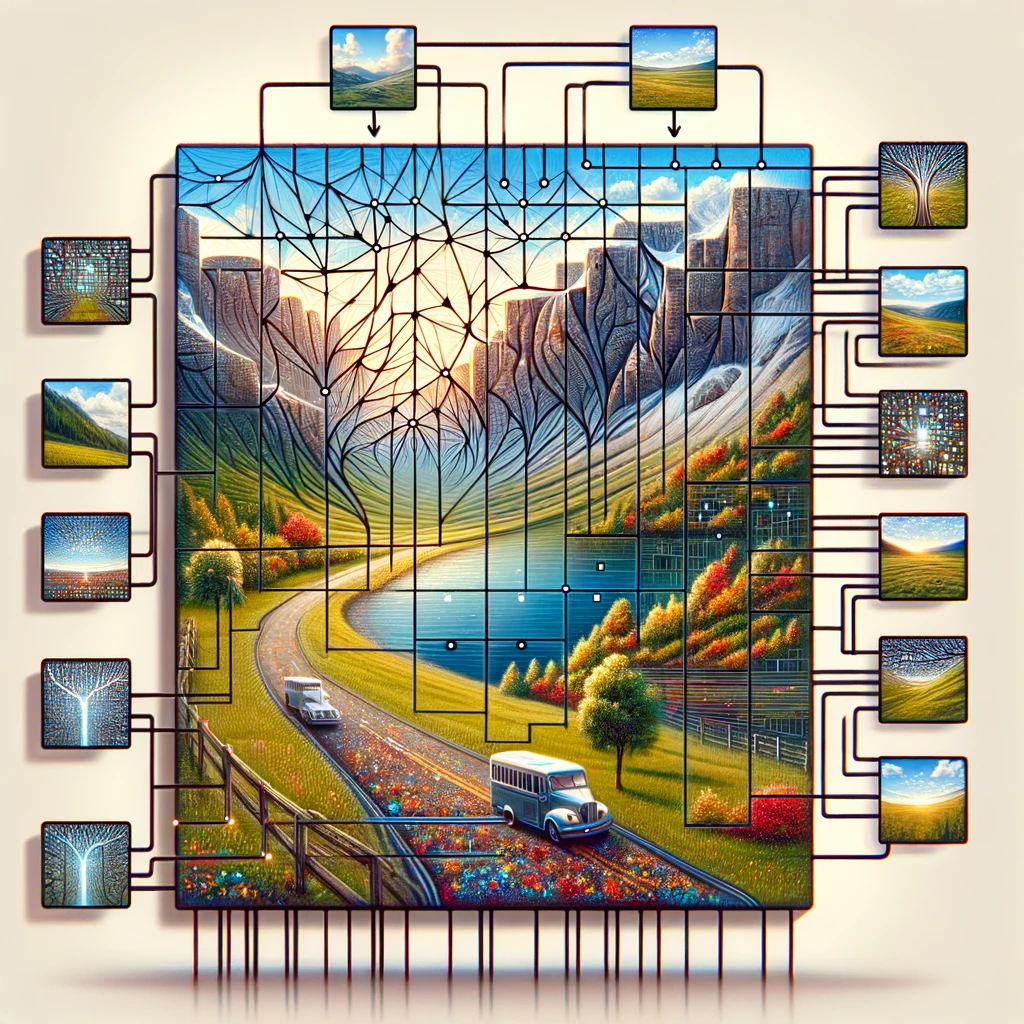

Vision Transformers (ViTs) apply the transformer architecture, originally designed for natural language processing, to image recognition. They divide an image into fixed-size patches and treat each patch as a token, so self-attention can relate every patch to every other patch and capture global context, in contrast to the local receptive fields that convolutional neural networks (CNNs) build up layer by layer. With this approach, ViTs have reported over 88% accuracy on major benchmarks such as ImageNet while using considerably fewer computational resources than comparable CNNs[1][2][5].
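As a rough illustration of the patch-and-attend idea, the sketch below splits an image into non-overlapping patches and runs one single-head self-attention pass over them in NumPy. The function names `patchify` and `self_attention`, the 32×32 image, the 16-pixel patch size, and the random projection matrices are all assumptions made for the example; a real ViT first projects patches to a smaller model dimension, uses learned weights and multiple heads, and stacks many such layers.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    # (gh, p, gw, p, c) -> (gh, gw, p, p, c) -> (gh * gw, p * p * c)
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a token sequence."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))           # hypothetical toy image
tokens = patchify(image, patch_size=16)   # 4 patches, each 16 * 16 * 3 values

head_dim = 8                              # illustrative size, not from the source
w_q, w_k, w_v = (rng.normal(scale=0.02, size=(tokens.shape[1], head_dim))
                 for _ in range(3))
output, weights = self_attention(tokens, w_q, w_k, w_v)

print(tokens.shape, output.shape, weights.shape)
```

Each row of `weights` is a distribution over all patches, which is what lets a single layer mix information from anywhere in the image.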

ViTs use patch embeddings together with position embeddings to retain spatial information, which lets them learn intricate patterns within images. Because self-attention operates over the whole image, its attention weights can be inspected to see which regions influenced a prediction, which makes ViTs comparatively interpretable for image classification. Their performance advantage is most pronounced when extensive training data is available[3][4][6].
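To see why position embeddings matter, the following minimal sketch projects flattened patches to a model dimension and optionally adds one position vector per patch slot. The names `embed`, `w_embed`, and `pos_embed`, along with the sizes and random values, are assumptions for illustration; in a trained model both matrices would be learned.

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, patch_dim, model_dim = 4, 768, 64   # illustrative sizes

# Hypothetical stand-ins for learned parameters.
w_embed = rng.normal(scale=0.02, size=(patch_dim, model_dim))
pos_embed = rng.normal(scale=0.02, size=(num_patches, model_dim))

def embed(patches, use_position=True):
    """Linearly project patches, then optionally add position embeddings."""
    tokens = patches @ w_embed
    if use_position:
        tokens = tokens + pos_embed
    return tokens

patches = rng.normal(size=(num_patches, patch_dim))
shuffled = patches[::-1]                          # same patches, reversed order

# Without position embeddings, shuffling patches only reorders the tokens,
# so the model cannot tell where each patch came from.
no_pos_same = np.allclose(embed(patches, False)[::-1], embed(shuffled, False))

# With position embeddings, the same patch yields a different token
# depending on the slot it occupies.
with_pos_same = np.allclose(embed(patches)[::-1], embed(shuffled))

print(no_pos_same, with_pos_same)
```

The comparison shows that the patch projection alone is blind to order, and it is the added position vectors that tie each token to a location in the image.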
