Overview

Integrating wet lab automation with cloud-based machine learning links physical experiments to computational feedback loops, with the aim of accelerating drug discovery and scientific research[1][2]. This report outlines the hardware stack used for lab automation, the role of laboratory information management systems (LIMS) in data integration, and the orchestration tools that coordinate these workflows. It also describes a conceptual end-to-end workflow and summarizes published cost analyses.

Hardware and Automation Platforms

Modern wet labs incorporate robotic systems and closed-loop automation platforms that execute experiments across modalities such as biochemistry, biophysics, and cellular biology[1]. For instance, combining liquid handlers, robotic workcells, and custom assay cascades allows rapid, precise data generation, reportedly producing hundreds of times more data points per assay than industry norms[1]. In addition, platforms like LINQ provide a node-based workflow canvas and a Python SDK for designing, simulating, and running experimental protocols live, so that scientists can customize and integrate their solutions without extensive programming knowledge[6].

Lab Information Management Systems (LIMS) and Data Integration

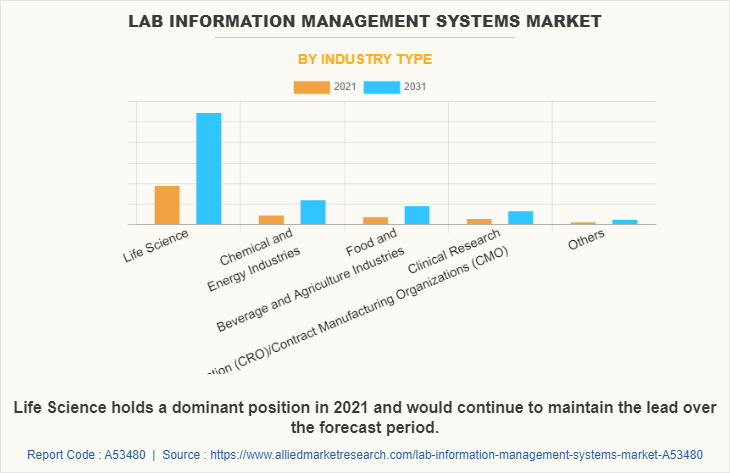

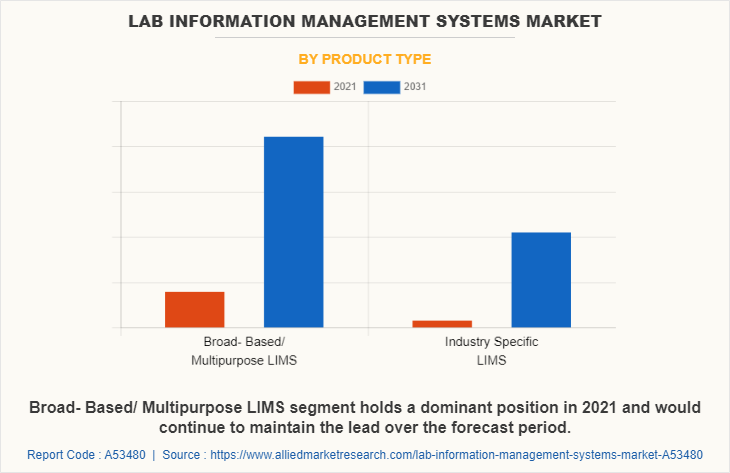

LIMS solutions are critical to managing laboratory data, instruments, and workflows. They create a centralized repository for experimental data and metadata, so that information is accessible to every team member and procedures are compliant and traceable[2]. Companies like Thermo Fisher provide LIMS platforms that are purpose-built to support laboratory functions such as sample tracking, workflow automation, and real-time data management in environments ranging from research and development to manufacturing and bioanalysis[3]. This centralized approach helps keep the laboratory's experimental data FAIR (findable, accessible, interoperable, and reusable), which supports further computational analysis in the cloud.
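As a rough illustration of what a FAIR-oriented record might carry, the sketch below models a single sample entry and serializes it to JSON. The class name, field names, and example values are all hypothetical; they are not taken from any vendor's LIMS schema.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class SampleRecord:
    """Hypothetical LIMS entry; fields are illustrative, not a vendor schema."""
    sample_id: str    # findable: stable unique identifier
    assay: str        # interoperable: agreed assay name
    instrument: str   # traceable: which device produced the data
    operator: str     # traceable: who ran the experiment
    result_uri: str   # accessible: where the raw data lives
    units: str        # reusable: units travel with the values
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_json(record: SampleRecord) -> str:
    """Serialize a record with sorted keys so stored copies diff cleanly."""
    return json.dumps(asdict(record), sort_keys=True)


# Example values are invented for illustration only.
record = SampleRecord(
    sample_id="S-0001",
    assay="enzyme_inhibition",
    instrument="plate_reader_1",
    operator="jdoe",
    result_uri="s3://example-bucket/S-0001.csv",
    units="nM",
)
payload = to_json(record)
```

Keeping identifiers, provenance, and units in the same record as the pointer to the raw data is one simple way to make downstream cloud analysis independent of the bench notebook.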

Pipeline Orchestration Tools

Pipeline orchestration tools automate, coordinate, and manage the stages of a machine learning project, from data preprocessing and feature engineering to model training and deployment. They help make workflows reproducible, scalable, and robust by providing parallel execution, resource management, and error handling[4]. Platforms such as Apache Airflow, Prefect, Dagster, and Kubeflow have emerged as leaders in this space. They let data scientists and ML engineers define workflows as directed acyclic graphs (DAGs) and integrate continuous integration/continuous deployment (CI/CD) practices into ML pipelines[7]. These tools serve as the computational counterpart to physical experiment automation: they feed experimental data into machine learning models and keep the feedback loop running for continuous improvement.
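The DAG idea can be sketched without any orchestration framework. The example below uses only Python's standard-library `graphlib` (Python 3.9+) to order stages so that each runs after its upstream dependencies. It does not use the Airflow, Prefect, Dagster, or Kubeflow APIs, and the stage names and no-op tasks are assumptions made for illustration.

```python
from graphlib import TopologicalSorter

# Each key lists the stages it depends on (stage names are illustrative).
pipeline = {
    "preprocess": set(),
    "validate_data": {"preprocess"},
    "feature_engineering": {"preprocess"},
    "train": {"feature_engineering", "validate_data"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def run_pipeline(dag, tasks):
    """Run every stage once, only after all of its upstream stages."""
    order = []
    for stage in TopologicalSorter(dag).static_order():
        tasks[stage]()          # a real orchestrator would also retry and log
        order.append(stage)
    return order


# Placeholder tasks that do nothing; real stages would call pipeline code.
tasks = {name: (lambda: None) for name in pipeline}
executed = run_pipeline(pipeline, tasks)
```

Here `validate_data` and `feature_engineering` have no dependency on each other, which is the kind of independence a production orchestrator can exploit to run stages in parallel.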

Workflow Diagrams and Cost Analyses

A typical integrated workflow starts with data capture at the bench using automated instruments, followed by execution of experiments controlled by robotic systems. The data is then annotated and stored in a system of record such as an ELN or LIMS. This annotated data is fed into design-of-experiment software to structure and standardize protocols, and then passed on to orchestration tools that manage the machine learning pipeline on cloud infrastructure. Finally, the computational feedback, including predictive models and analysis, informs subsequent experimental design and adjustments in the physical workflow. This cycle can be drawn as a workflow diagram in which sensors, robotic systems, LIMS, orchestration engines, and cloud computing modules are connected in a continuous loop of data capture, analysis, and iterative experimentation[2].
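The loop described above can be mimicked in a few lines. In this minimal sketch, every component is a stand-in: `run_assay` replaces the robotic experiment with an invented response curve, a plain list replaces the ELN or LIMS, and the "model" is simply "keep the best dose seen so far and halve the search window". None of these names or numbers come from the source.

```python
def run_assay(dose):
    """Stand-in for a robotic experiment; the response curve is invented."""
    return -(dose - 3.2) ** 2


def propose_doses(center, width, n=5):
    """Stand-in for the design step: n evenly spaced doses around center."""
    step = 2 * width / (n - 1)
    return [center - width + i * step for i in range(n)]


def closed_loop(rounds=6, center=5.0, width=5.0):
    """Iterate design -> execute -> record -> analyze -> redesign."""
    records = []  # stand-in for the ELN/LIMS system of record
    best = None
    for round_id in range(rounds):
        for dose in propose_doses(center, width):
            records.append(
                {"round": round_id, "dose": dose, "response": run_assay(dose)}
            )
        # Analysis step: take the best result so far and narrow the search.
        best = max(records, key=lambda r: r["response"])
        center, width = best["dose"], width / 2
    return best, records


best, records = closed_loop()
```

A real deployment would replace the analysis step with a trained model running on cloud infrastructure, but the control flow (each round's results shaping the next round's design) stays the same.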

Cost analyses of laboratory information systems have shown that, while the initial capital expenditure for a full laboratory automation and communication system is high, the cost per test can be reduced dramatically. In one study, the cost per test for full-service capability ranged between 13 and 17 cents; on a per-patient-day basis, expenses ranged between 59 and 85 cents[5]. These efficiencies help justify the initial investment by reducing recurring costs over time and improving throughput.
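To make the trade-off between capital expenditure and per-test cost concrete, the sketch below computes a break-even test volume. Only the 13 and 17 cent per-test figures come from the study cited above; the capital cost and the baseline per-test cost are hypothetical placeholders, and treating the study's figures as directly comparable to that baseline is itself an assumption.

```python
import math


def break_even_tests(capital_cost, baseline_cost_per_test, automated_cost_per_test):
    """Number of tests after which per-test savings repay the capital cost."""
    saving = baseline_cost_per_test - automated_cost_per_test
    if saving <= 0:
        raise ValueError("automation must be cheaper per test to break even")
    return math.ceil(capital_cost / saving)


# Hypothetical inputs, chosen only to illustrate the arithmetic.
capital = 250_000.00   # assumed up-front spend, in dollars
baseline = 0.50        # assumed pre-automation cost per test, in dollars

# Per-test range reported in the cited study (13 to 17 cents).
low = break_even_tests(capital, baseline, 0.13)
high = break_even_tests(capital, baseline, 0.17)
```

With different capital or baseline assumptions the break-even volume shifts proportionally, so the calculation is most useful as a template into which a lab substitutes its own figures.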

Conclusion

Integrating wet lab automation with cloud-based machine learning is changing how scientific experiments are conducted and analyzed. By combining automated hardware platforms, LIMS solutions, and pipeline orchestration tools, laboratories can bridge the gap between physical experimentation and digital analysis. The resulting feedback loops support rapid, data-driven decisions that can shorten development cycles, improve success rates, and reduce costs. This unified approach streamlines the design-make-test-analyze cycle and opens the way to more predictive and adaptive experimental strategies for modern research and therapeutic development.
