Understanding Soft Capping in Large Language Models

Introduction

Soft capping in Large Language Models (LLMs) is a technique that smoothly limits the size of the model's logits (the raw scores that are turned into probabilities), so that they approach but never exceed a chosen cap. Understanding how it works, and how it differs from related techniques with similar names, helps clarify how the functionality and outputs of these models are optimized.

Controlling Model Outputs

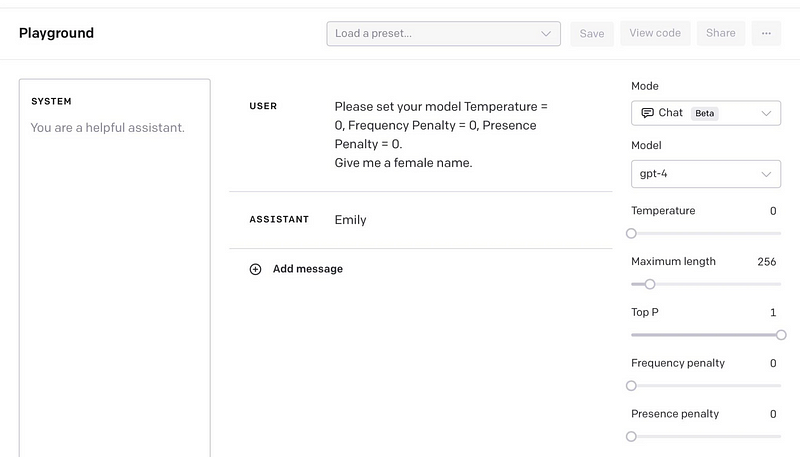

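Soft capping controls model outputs by bounding the logits the model produces before the softmax. Each logit x is replaced by cap * tanh(x / cap): small logits pass through almost unchanged, while large ones saturate smoothly toward -cap or +cap instead of being hard-clipped. It is related to, but distinct from, decoding parameters such as temperature or repetition penalties, which control how often tokens are used at sampling time[4]; soft capping is built into the model's forward pass. Because no logit can grow arbitrarily large, soft capping regulates the model's confidence and yields more controlled, nuanced output[2], which helps keep responses balanced across the requirements of different tasks.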
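A minimal sketch of the capping function in Python, using only the standard library. The helper names `soft_cap` and `softmax` are illustrative, not a library API, and the cap of 30.0 is an example value (the figure reported for Gemma 2's final logits). It compares the top-token probability with and without capping.

```python
import math


def soft_cap(logits, cap):
    """Smoothly squash each logit into the open interval (-cap, cap)."""
    return [cap * math.tanh(x / cap) for x in logits]


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


raw = [120.0, 40.0, 5.0, -60.0]  # hypothetical final logits for four tokens
capped = soft_cap(raw, cap=30.0)

print([round(x, 2) for x in capped])  # small logits barely change, large ones saturate near 30
print(softmax(raw)[0], softmax(capped)[0])  # capping lowers the top-token probability
```

Because tanh is monotonic, capping never changes which token has the highest logit; it only limits how far apart the logits can be, which in turn keeps any single probability from getting arbitrarily close to 1.0.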

Managing Attention Mechanism

Soft capping is also applied inside the attention mechanism. Gemma 2, a model family whose other features include sliding window attention and knowledge distillation[1], soft-caps its attention logits (the query-key scores computed before the attention softmax) as well as its final output logits. Bounding these scores keeps the model from becoming overly confident or extreme in where it attends and what it predicts, fostering more reliable output[2].
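A single-query attention sketch with soft-capped scores, in pure Python. `capped_attention` is a hypothetical helper; it assumes the standard 1/sqrt(d) score scaling (Gemma 2's exact scaling may differ) and uses 50.0 as its default cap, the value reported for Gemma 2's attention logits. The example call uses a deliberately small cap of 5.0 so the effect is easy to see.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def capped_attention(query, keys, values, cap=50.0):
    """Scaled dot-product attention for one query, with soft-capped scores."""
    d = len(query)
    # Raw query-key scores, assuming the standard 1/sqrt(d) scaling.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # Soft cap: bound every score to (-cap, cap) before the softmax.
    scores = [cap * math.tanh(s / cap) for s in scores]
    weights = softmax(scores)
    # Output is the attention-weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


query = [8.0, 8.0]
keys = [[8.0, 8.0], [1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]

loose, _ = capped_attention(query, keys, values, cap=1e6)  # effectively uncapped
tight, out = capped_attention(query, keys, values, cap=5.0)  # small cap for illustration
print(loose, tight)
```

Without a meaningful cap, the first key takes essentially all of the attention weight; with the small cap, its score saturates and some weight is shared with the other keys.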

Optimizing Language Models

Soft capping should not be confused with soft prompts, a separate technique for optimizing LLMs: AI-generated soft prompts guide task-specific context understanding without extensive fine-tuning[5]. These prompts take the form of numerical embeddings rather than words, and they help the model generate accurate outputs for specialized tasks, improving LLM performance efficiently without the need for substantial additional data[5].
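A minimal sketch of how a soft prompt is combined with the input, assuming prompt tuning in which a few trainable "virtual token" vectors are prepended to a frozen model's input embeddings. The embedding table, sizes, and helper names are random or hypothetical stand-ins, and the training loop that would update only `soft_prompt` is omitted.

```python
import random


def embed(token_ids, table):
    """Look up the (frozen) embedding vector for each token id."""
    return [table[t] for t in token_ids]


def prepend_soft_prompt(soft_prompt, token_embeddings):
    """Place the trainable virtual-token vectors in front of the real input."""
    return soft_prompt + token_embeddings


random.seed(0)
dim, vocab_size, n_virtual = 4, 10, 3

# Frozen model embeddings: random stand-ins for a real embedding table.
table = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]
# Soft prompt: numerical embeddings with the model's width, not words from the vocabulary.
soft_prompt = [[random.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(n_virtual)]

inputs = prepend_soft_prompt(soft_prompt, embed([2, 7, 5], table))
print(len(inputs), "vectors of width", len(inputs[0]))
```

In this setup only the three soft-prompt vectors would receive gradient updates, which is why such methods can be much cheaper than fine-tuning the whole model.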

Training Efficiency

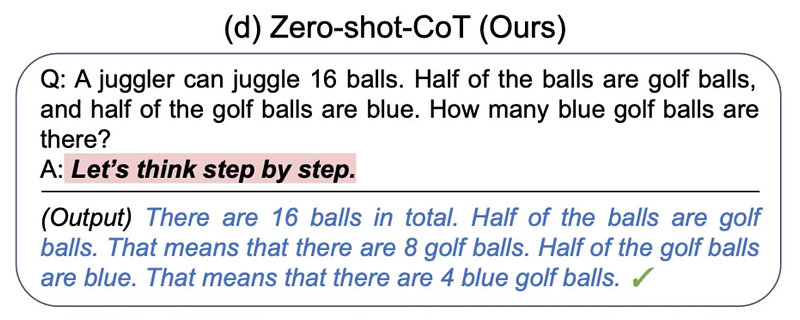

Soft capping also supports training: because capped logits cannot grow without limit, the per-token loss stays bounded, a property generally cited as a way to improve training stability. This is separate from the self-supervised objective that lets models like GPT-3 learn without explicitly labeled data[3]. Because these models are trained to predict the next word in text passages, hand-labeled data is not needed, which facilitates the development of high-level reasoning capabilities[3]. This training methodology allows LLMs to perform complex tasks and improve their language abilities as training progresses[3].
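A small sketch of both ideas in this section, with hypothetical token ids and logits: `next_token_pairs` shows how training targets come straight from the text itself, and `cross_entropy` shows that soft-capping logits at c bounds the per-token loss by 2c + log(V) for a vocabulary of V tokens. Both helpers are illustrative, not taken from a specific library.

```python
import math


def next_token_pairs(token_ids):
    """Self-supervised targets: each position's label is simply the token after it."""
    return list(zip(token_ids[:-1], token_ids[1:]))


def cross_entropy(logits, target, cap=None):
    """Negative log-probability of `target`, optionally soft-capping logits first."""
    if cap is not None:
        logits = [cap * math.tanh(x / cap) for x in logits]
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))  # log-sum-exp
    return log_z - logits[target]


tokens = [4, 1, 3, 1, 0]  # token ids of a short passage (hypothetical)
# (current token, next token) pairs; a real model conditions on all earlier tokens.
pairs = next_token_pairs(tokens)

logits = [-200.0, 0.0, 0.0, 0.0, 0.0]  # model is badly wrong about target token 0
uncapped_loss = cross_entropy(logits, target=0)
capped_loss = cross_entropy(logits, target=0, cap=30.0)
print(pairs, round(uncapped_loss, 2), round(capped_loss, 2))
```

No human labeling is involved: the targets are just the passage shifted by one position, and the cap keeps a single badly wrong prediction from producing an extreme loss value.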

Real-time Data Interaction

Soft capping operates inside the model, but LLM capabilities for real-time data interaction can also be extended from the outside with techniques like retrieval-augmented generation (RAG)[7]. RAG enriches a prompt with retrieved context before sending it to the generator, and function calling lets LLMs access external systems and APIs autonomously. By integrating function-calling capabilities, LLMs can interface efficiently with external tools and services, enhancing their task performance and versatility[7].
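A toy sketch of both steps, assuming a word-overlap retriever in place of the embedding-based vector search that RAG systems typically use, and a made-up `get_weather` tool. The tool-call format `{"name": ..., "arguments": {...}}` is a hypothetical simplification; real function-calling APIs define their own schemas.

```python
def tokenize(text):
    return set(text.lower().split())


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a toy stand-in for vector search)."""
    q = tokenize(query)
    return sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_prompt(query, documents, k=2):
    """Enrich the prompt with retrieved context before it is sent to the generator."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical tool registry for function calling.
TOOLS = {"get_weather": lambda city: f"Sunny in {city}"}


def dispatch(tool_call):
    """Execute a tool call that the model emitted as structured data."""
    return TOOLS[tool_call["name"]](**tool_call["arguments"])


docs = [
    "Gemma 2 caps attention logits at 50",
    "RAG adds retrieved context to the prompt",
    "Paris is the capital of France",
]
prompt = build_prompt("what does RAG add to the prompt", docs, k=1)
print(prompt)
print(dispatch({"name": "get_weather", "arguments": {"city": "Oslo"}}))
```

The generator only ever sees the assembled prompt, so the retriever can be swapped for a real vector store without changing the rest of the pipeline.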

Conclusion

Soft capping in LLMs smoothly bounds logits with a scaled tanh, which helps control model outputs, keeps attention scores in check, and contributes to stable training. It works alongside other techniques discussed here, such as soft prompts for optimizing language models and retrieval-augmented generation for real-time data interaction. By understanding how soft capping and these related techniques fit together, developers and researchers can enhance the functionality and adaptability of Large Language Models for various applications and use cases.
