Overview and Research Motivation

The paper "The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity" investigates how recent Large Reasoning Models (LRMs) behave when they generate chain-of-thought reasoning traces before giving a final answer. The study examines the capabilities and limitations of these models on problems that require sequential reasoning and planning. The authors ask whether these models engage in generalizable reasoning or instead rely on a form of pattern matching, a question that results on established mathematical and coding benchmarks alone do not settle[1].

Experimental Setup and Controlled Puzzle Environments

To analyze the reasoning behavior of LRMs, the researchers designed a controlled testbed built on four algorithmic puzzles: Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World. Each puzzle allows precise manipulation of problem complexity while keeping the logical structure fixed. For example, Tower of Hanoi tests sequential planning because its difficulty scales exponentially with the number of disks (the shortest solution for n disks takes 2^n - 1 moves), while Checker Jumping requires adherence to strict movement rules to swap red and blue checkers. These controlled environments make it possible to examine not only final answer accuracy but also the full reasoning process, including intermediate solution paths, correctness verification, and how the models use their token budgets during inference[1].
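Because the puzzle rules are explicit, a proposed solution can be checked move by move rather than only by its final answer. The following is a minimal sketch of such a check for Tower of Hanoi. The function name `validate_hanoi` and the `(from_peg, to_peg)` move encoding are assumptions made for illustration, not the format or simulator used in the paper.

```python
from typing import List, Tuple

# Assumed encoding: a move is (from_peg, to_peg), with pegs indexed 0, 1, 2.
Move = Tuple[int, int]


def validate_hanoi(n_disks: int, moves: List[Move]) -> bool:
    """Return True if `moves` legally transfers all disks from peg 0 to peg 2."""
    # Each peg is a stack; the largest disk (n_disks) sits at the bottom of peg 0.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    for src, dst in moves:
        # Reject unknown pegs and moves from an empty peg.
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or not pegs[src]:
            return False
        disk = pegs[src][-1]
        # A larger disk may never be placed on a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return False
        pegs[dst].append(pegs[src].pop())
    # Solved only if every disk ends up on peg 2 in the original order.
    return pegs[2] == list(range(n_disks, 0, -1))


if __name__ == "__main__":
    print(validate_hanoi(2, [(0, 1), (0, 2), (1, 2)]))  # legal and complete
    print(validate_hanoi(2, [(0, 2), (0, 2)]))          # larger disk on smaller
```

A checker of this kind can flag the first illegal move in a sequence, which is what makes fine-grained analysis of failures possible in a rule-based environment.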

Key Findings and Performance Regimes

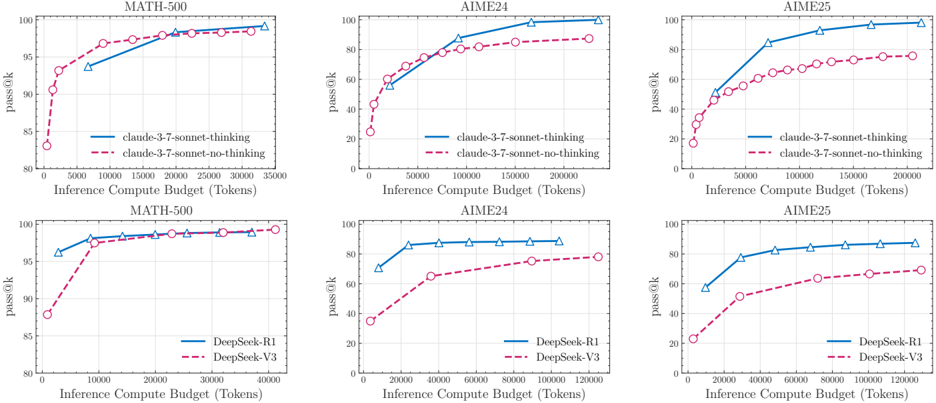

A major insight from the study is the identification of three distinct performance regimes as problem complexity increases. In the first regime, at low complexity, standard models that do not produce explicit reasoning traces can sometimes outperform LRMs. In the second regime, at moderate complexity, models with chain-of-thought generation show a distinct advantage, as their thinking process helps them navigate more intricate puzzle constraints. In the third regime, at high complexity, both thinking and non-thinking models experience a complete collapse in accuracy: beyond a certain threshold, the accuracy of LRMs falls to zero even though they have ample token budgets. The study also observes that as problems become more complex, the models initially spend more reasoning tokens but then, counterintuitively, spend fewer as they approach the complexity at which accuracy collapses. This decline in reasoning effort is accompanied by inconsistent reasoning and a failure to carry the required computational steps through to the end of the solution[1].

Analysis of Intermediate Reasoning Traces

The paper places significant emphasis on inspecting the intermediate reasoning traces produced by the models. By extracting candidate solutions from the chain-of-thought, the study examines where correct and incorrect intermediate solutions occur and how they affect the overall problem-solving process. On simpler problems, the models often find a correct solution early in the trace but then overthink, continuing to explore redundant or incorrect alternatives, which wastes compute. On moderately complex problems, the models first generate several incorrect solutions and arrive at a correct one only later in the trace. On very complex problems, no correct solution appears at any point in the trace, which amounts to a complete breakdown in reasoning. This analysis provides evidence of the models’ limited self-correction capabilities and highlights fundamental scaling issues in how inference compute is allocated as problem complexity increases[1].
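One way to make "early" and "late" concrete is to record where in the trace the first correct candidate solution appears, as a fraction of the trace length. The sketch below assumes the candidates have already been extracted and checked; the function name `first_correct_position` and the `(token_offset, is_correct)` input format are hypothetical and are not taken from the paper.

```python
from typing import List, Optional, Tuple


def first_correct_position(
    candidates: List[Tuple[int, bool]], total_tokens: int
) -> Optional[float]:
    """Relative position (0..1) of the first correct candidate in a trace.

    `candidates` holds (token_offset, is_correct) pairs in trace order
    (an assumed format). Returns None if no candidate is correct.
    """
    for offset, is_correct in candidates:
        if is_correct:
            return offset / total_tokens
    return None


if __name__ == "__main__":
    # Hypothetical trace: three candidate solutions, the second one correct.
    trace = [(100, False), (400, True), (900, False)]
    print(first_correct_position(trace, total_tokens=1000))
```

Under this measure, a small value followed by further candidates would correspond to the overthinking pattern, a large value to the moderate-complexity pattern, and `None` to the collapse case.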

Exact Computation and Algorithm Execution

Another significant observation in the paper is the models’ difficulty with exact computation and with following prescribed algorithmic steps. For instance, even when the researchers supplied a complete recursive algorithm for the Tower of Hanoi puzzle, performance did not improve notably. The models still collapsed at roughly the same level of complexity, which indicates that the failure stems not only from the difficulty of finding a solution from scratch but also from a more systemic limitation in executing strict, logical steps one after another. This inability to capitalize on explicit algorithmic guidance underscores the gap between human-like logical reasoning and the pattern-based reasoning exhibited by current LRMs[1].
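For reference, the standard recursive procedure for Tower of Hanoi is short, which is part of why the lack of improvement is striking. The sketch below is the textbook recursion, not necessarily the exact text given to the models; the function name `hanoi_moves` and the `(disk, from_peg, to_peg)` tuple format are assumptions for illustration.

```python
from typing import Iterator, Tuple


def hanoi_moves(
    n: int, src: int = 0, aux: int = 1, dst: int = 2
) -> Iterator[Tuple[int, int, int]]:
    """Yield (disk, from_peg, to_peg) moves that solve an n-disk puzzle."""
    if n == 0:
        return
    # Move the top n-1 disks out of the way, onto the auxiliary peg.
    yield from hanoi_moves(n - 1, src, dst, aux)
    # Move the largest remaining disk directly to the destination.
    yield (n, src, dst)
    # Move the n-1 disks from the auxiliary peg onto the destination.
    yield from hanoi_moves(n - 1, aux, src, dst)


if __name__ == "__main__":
    print(list(hanoi_moves(2)))
    # The move count follows T(n) = 2*T(n-1) + 1, i.e. 2**n - 1.
    for n in (3, 10, 20):
        print(n, sum(1 for _ in hanoi_moves(n)))
```

The procedure itself is three lines of logic, but carrying it out for n disks means emitting 2^n - 1 moves without a single error, so the length of exact execution grows far faster than the size of the instructions.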

Limitations and Implications for Future Research

The study makes clear that although LRMs have shown promising results on a variety of reasoning benchmarks, they still face severe limitations. The performance collapse at high complexity, the counterintuitive reduction in reasoning tokens as problems get harder, and the inability to reliably perform exact computations suggest that fundamental improvements are needed. The paper questions evaluation paradigms that focus primarily on final answer accuracy and advocates for metrics that assess the quality of intermediate reasoning. By using puzzle environments with clearly defined rules and precisely adjustable complexity, the research provides quantitative insight into where and why LRMs fail. These insights can guide future improvements in model architecture and training methods toward models with more robust and generalizable reasoning capabilities[1].

Conclusion

In summary, the paper provides a comprehensive examination of the capabilities and limitations of Large Reasoning Models through controlled experimentation with algorithmic puzzles. Crucial findings include the identification of three complexity regimes, detailed analysis of intermediate reasoning traces, and a demonstration of the models’ difficulties with exact computation and following explicit algorithmic steps. The research highlights that while chain-of-thought generation can enhance performance at moderate complexity, current LRMs ultimately fail to exhibit generalizable reasoning for highly complex tasks. These findings raise important questions about the true nature of reasoning in these systems and suggest that further research is needed to overcome the observed scaling and verification limitations[1].
