Introduction to Neural Architecture Search

Neural Architecture Search (NAS) automates the design of neural network architectures, a task that previously depended on manual engineering. It has been applied across a range of applications to improve the efficiency and effectiveness of deep learning implementations.

Transition from Manual to Automated Design

Historically, designing neural network architectures was a manual process that relied heavily on expert knowledge. This approach was time-consuming and limited in scope, so promising designs could be missed. Automated design changes this: NAS uses algorithms that explore very large architectural spaces and surface high-performing configurations that manual design often overlooks. The shift reflects a broader trend in AI toward systems that learn and adapt autonomously, reducing reliance on human input[1].

The transition from expert-driven design to automated techniques is reflected in the development of successive search methodologies. Early NAS methods used approaches such as reinforcement learning and evolutionary algorithms, which were innovative but computationally demanding[1]. As NAS matured, more efficient methodologies emerged, such as Differentiable Architecture Search (DARTS) and hardware-aware NAS (HW-NAS). These are better suited to modern applications, especially those that demand high performance in constrained environments such as edge devices[3].
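To make the idea behind differentiable search more concrete, the following is a minimal sketch of the continuous relaxation that DARTS relies on: instead of picking one operation per edge, the edge outputs a softmax-weighted mixture of all candidate operations, and the architecture is later discretized by keeping the operation with the largest weight. The candidate operations (`identity`, `double`, `zero`) and the function names are hypothetical, scalar inputs stand in for tensors, and the gradient-based optimization of the architecture parameters is deliberately left out.

```python
import math

# Hypothetical candidate operations; real search spaces would use
# convolutions, pooling, skip connections, and similar tensor operations.
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, alphas):
    """Softmax-weighted sum of every candidate operation applied to x."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))


def discretize(alphas):
    """Keep only the operation with the largest architecture parameter."""
    names = list(CANDIDATE_OPS)
    best_index = max(range(len(alphas)), key=lambda i: alphas[i])
    return names[best_index]


if __name__ == "__main__":
    alphas = [0.1, 2.0, -1.0]  # illustrative architecture parameters
    print(mixed_op(1.0, alphas))
    print(discretize(alphas))
```

Because the mixture is a smooth function of the architecture parameters, those parameters can in principle be tuned with gradient descent alongside the network weights, which is what makes this family of methods cheaper than training many candidate networks from scratch.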

Enhancing Efficiency and Performance through HW-NAS

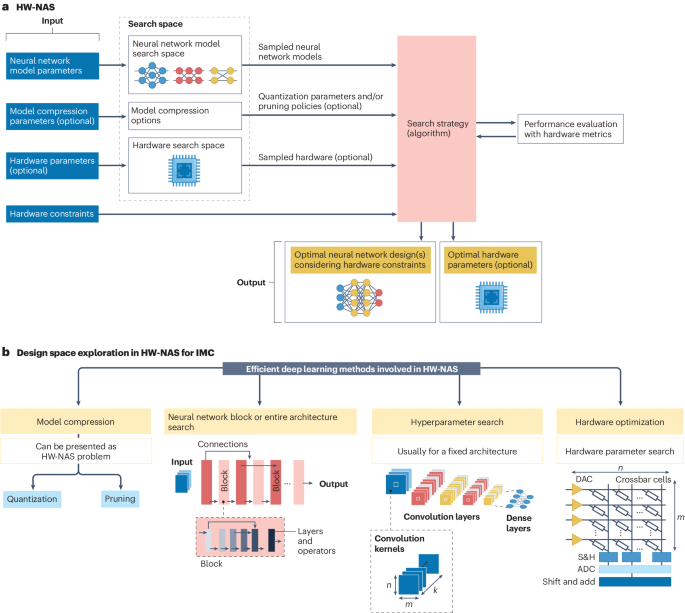

HW-NAS specifically addresses the complexities associated with integrating hardware constraints into neural architecture optimization. It expands conventional NAS by considering critical hardware parameters, such as energy efficiency and operational speed, which are essential for in-memory computing (IMC) technologies. The integration of NAS with hardware parameters facilitates the design of streamlined neural networks that are optimized for efficient deployment on targeted hardware[3].

For example, as AI applications become increasingly prevalent in resource-constrained environments, HW-NAS plays a crucial role in optimizing both the architecture of neural networks and the corresponding hardware design. It enables co-optimization of models and hardware architecture, thus maximizing performance while minimizing the energy footprint. Importantly, HW-NAS incorporates specific hardware-related details into the search space, such as crossbar size and precision of analog-to-digital converters, enhancing the alignment between neural architecture and its operational context[3].
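As an illustration of how hardware details can enter the search, the sketch below enumerates a small joint search space that includes both network parameters (`depth`, `width`) and hardware parameters (`crossbar_size`, `adc_bits`), then scores each candidate with a single objective that trades a proxy for accuracy against a proxy for energy. Everything here is an assumption made for illustration: the value ranges, the two proxy formulas, and the `energy_weight` parameter are invented, and a real HW-NAS flow would replace the proxies with trained predictors or hardware simulators.

```python
import math
from itertools import product

# Hypothetical joint search space: network and hardware parameters together.
SEARCH_SPACE = {
    "depth": [4, 8, 12],
    "width": [32, 64, 128],
    "crossbar_size": [64, 128, 256],
    "adc_bits": [4, 6, 8],
}


def proxy_accuracy(cfg):
    """Toy stand-in: larger networks and finer ADC precision score higher."""
    capacity = math.log2(cfg["depth"] * cfg["width"])
    return capacity * cfg["adc_bits"] / 8.0


def proxy_energy(cfg):
    """Toy stand-in: cost grows with crossbar tiles used and ADC resolution."""
    tiles = math.ceil(cfg["width"] / cfg["crossbar_size"]) * cfg["depth"]
    return tiles * cfg["crossbar_size"] * 2 ** cfg["adc_bits"] / 1000.0


def score(cfg, energy_weight):
    """Scalarized objective: reward accuracy, penalize energy."""
    return proxy_accuracy(cfg) - energy_weight * proxy_energy(cfg)


def search(energy_weight):
    """Exhaustively evaluate every configuration and return the best one."""
    keys = list(SEARCH_SPACE)
    candidates = [dict(zip(keys, values)) for values in product(*SEARCH_SPACE.values())]
    return max(candidates, key=lambda cfg: score(cfg, energy_weight))


if __name__ == "__main__":
    print(search(energy_weight=0.0))  # accuracy only
    print(search(energy_weight=1.0))  # accuracy traded against energy
```

Exhaustive enumeration is only feasible because this toy space has 81 configurations; larger spaces would need one of the search strategies discussed earlier. Raising `energy_weight` pushes the selected configuration toward lower energy, which mirrors the co-optimization of model and hardware described above.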

Democratizing Access to Advanced AI Models

The advancements brought by NAS technology have democratized access to sophisticated AI models. By automating architecture discovery, NAS lowers the barrier for developers and researchers who may lack the extensive domain knowledge previously required to craft high-performance neural networks. As HW-NAS techniques evolve, they further enable non-experts to utilize cutting-edge neural architectures optimized for specific applications, such as mobile health monitoring or real-time image processing.

The implications of this democratization are profound. They promise to accelerate innovation within fields such as medical imaging, where the performance of deep learning models can significantly improve patient outcomes[1], as well as in natural language processing (NLP), where optimized architectures can expand the capabilities of language understanding models. The seamless integration of these components through automated techniques is reshaping how industries approach AI deployment.

Addressing Challenges and Future Directions

Despite the advancements that NAS offers, several challenges remain, particularly in scalability and robustness. One is the lack of a unified framework that effectively combines diverse neural network models with various IMC architectures. Many frameworks primarily target convolutional neural networks, potentially sidelining other important architectures, such as transformers, which have gained traction in recent years[3].

Moreover, a comprehensive benchmarking framework for HW-NAS that encompasses various neural architectures and the associated hardware is still a work in progress. Developing such benchmarks is essential for ensuring reproducibility and fair comparisons of different NAS approaches, which can, in turn, accelerate research in this field[3].

Future developments could focus on automated workflows that cover not just the neural architecture but also the entire training and deployment process. Moving toward fully automated solutions with minimal human intervention could open new possibilities for building bespoke AI solutions to complex problems[3].

Conclusion

In summary, Neural Architecture Search, particularly in its hardware-aware form, is changing how AI systems are designed. By automating and optimizing neural network architecture design, NAS allows for the development of more efficient, scalable, and accessible AI solutions. As research continues to address existing challenges and explore new methodologies, NAS retains strong potential to improve AI design and deployment across a range of applications.
