Overview of Brain-to-Text Technology

Recent advances in neuroprosthetics are helping people who have lost the ability to speak or write to communicate again. The study introduces Brain2Qwerty, a non-invasive method that decodes sentences directly from the brain activity recorded while participants type. It relies on electroencephalography (EEG) and magnetoencephalography (MEG) recordings to reconstruct the text participants type after each sentence is presented to them.

The Brain2Qwerty Approach

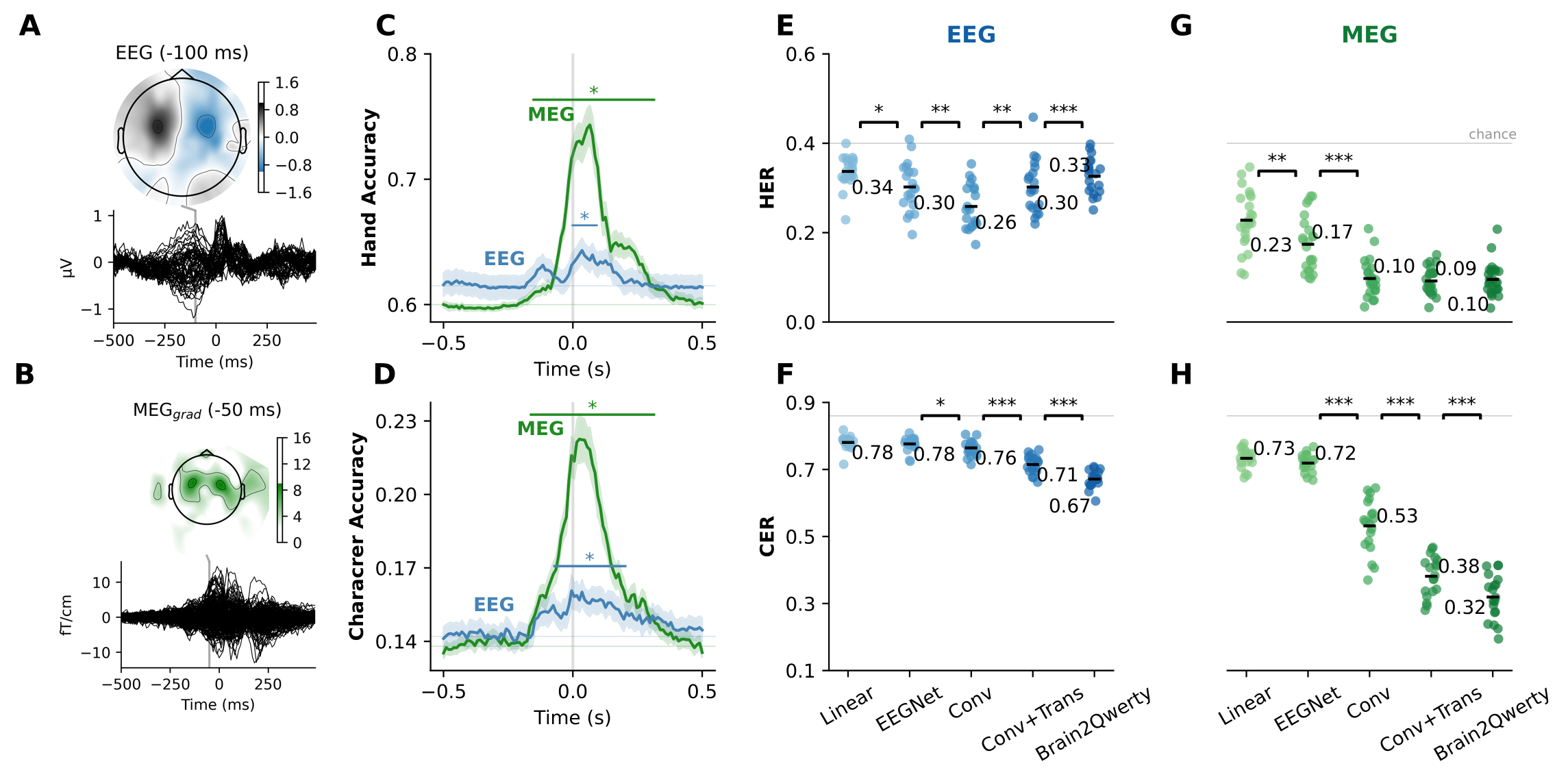

Brain2Qwerty is a deep learning architecture that decodes sentences from the brain activity produced while participants type on a QWERTY keyboard. In the study, volunteers typed a total of 128 sentences while their brain activity was recorded with EEG and MEG. On each trial, a sentence was displayed on a screen one word at a time and the participant then typed it; each trial was divided into three stages: read, wait, and type. With MEG signals, the overall character error rate (CER) was 32.0±6.6%, and the best-performing participants reached a CER as low as 19%.
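Character error rate is conventionally computed as the Levenshtein (edit) distance between the decoded text and the reference text, divided by the length of the reference. The sketch below assumes that standard definition, and also assumes that the reported ±6.6% is a sample standard deviation across participants; the function names `levenshtein`, `cer` and `summarize` are illustrative and not taken from the study.

```python
from statistics import mean, stdev


def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character insertions, deletions and substitutions
    needed to turn `ref` into `hyp` (classic dynamic programming)."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # delete r from ref
                curr[j - 1] + 1,         # insert h into ref
                prev[j - 1] + (r != h),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance normalised by reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


def summarize(per_participant_cer: list[float]) -> str:
    """Mean ± sample standard deviation across participants, in percent.
    (Assumption: the study may report a different dispersion measure.)"""
    m = 100 * mean(per_participant_cer)
    s = 100 * stdev(per_participant_cer)
    return f"{m:.1f}±{s:.1f}%"
```

For example, decoding "the cat sat" as "the bat sat" is one substitution over 11 reference characters, a CER of about 9%.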

Comparative Performance of Brain2Qwerty

Brain2Qwerty substantially outperforms traditional brain-computer interfaces (BCIs), and its character error rate considerably narrows the gap between invasive and non-invasive methods. MEG consistently outperformed EEG, yielding lower character error rates across conditions. The paper also emphasizes that the approach can decode sentences beyond those in the training set, highlighting its versatility.
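Whether a decoder truly generalizes beyond its training set depends on how the data are split. A minimal sketch, assuming each trial is labelled with the sentence that was typed, is to hold out whole sentences so that no test sentence ever appears during training. The function `split_by_sentence` and its parameters are hypothetical and are not the study's published evaluation protocol.

```python
import random
from collections import defaultdict


def split_by_sentence(trials, test_fraction=0.2, seed=0):
    """Hypothetical helper: split trials so that no sentence appears in both
    the training set and the test set.

    `trials` is a list of (sentence_text, recording) pairs; several trials
    may share a sentence (e.g. the same sentence typed by different people).
    """
    by_sentence = defaultdict(list)
    for sentence, recording in trials:
        by_sentence[sentence].append((sentence, recording))

    sentences = sorted(by_sentence)         # deterministic order before shuffling
    random.Random(seed).shuffle(sentences)  # reproducible random assignment
    n_test = max(1, round(test_fraction * len(sentences)))
    test_sentences = set(sentences[:n_test])

    # Every trial of a held-out sentence goes to test, all others to train.
    train = [t for s in sentences if s not in test_sentences for t in by_sentence[s]]
    test = [t for s in sentences if s in test_sentences for t in by_sentence[s]]
    return train, test
```

Grouping by sentence rather than shuffling individual trials prevents a model from scoring well simply by memorizing sentences it has already seen.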

Impact of Typing Errors and Language Model Integration

An analysis of typing errors showed that 3.9% of keystrokes were mistakes. The study also examined how character and word frequency affect decoding accuracy, and found that frequent words were decoded more easily than rare ones. Integrating a language model into Brain2Qwerty lowered the character error rate by exploiting the statistical regularities of language, and decoding accuracy continued to improve as more training data were added.
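One way a language model can inject linguistic regularities is shallow fusion: each candidate character's score from the brain decoder is combined with its probability under a language model, and the best-scoring character sequence is found by dynamic programming. The sketch below is a stand-in under stated assumptions, not the study's method: it uses a hypothetical per-keystroke classifier output (`char_probs`), an add-one-smoothed character bigram model in place of whatever language model the study used, Viterbi search, and an assumed tuning parameter `lm_weight`.

```python
import math
from collections import Counter, defaultdict


def train_bigram_lm(corpus, alphabet, alpha=1.0):
    """Add-alpha smoothed character bigram model: lm[prev][next] = P(next | prev).
    "^" marks the start of a sentence (assumed not to be in `alphabet`)."""
    counts = defaultdict(Counter)
    for text in corpus:
        prev = "^"
        for ch in text:
            counts[prev][ch] += 1
            prev = ch
    lm = {}
    for prev in ["^"] + list(alphabet):
        total = sum(counts[prev].values()) + alpha * len(alphabet)
        lm[prev] = {ch: (counts[prev][ch] + alpha) / total for ch in alphabet}
    return lm


def viterbi_decode(char_probs, lm, lm_weight=0.5):
    """Return the character sequence maximising
    sum_t [log P_brain(c_t) + lm_weight * log P_lm(c_t | c_{t-1})].

    `char_probs` (hypothetical) holds one dict per keystroke mapping every
    character in the alphabet to a strictly positive probability.
    """
    alphabet = list(lm["^"])
    # best[ch] = (score, sequence) of the best path ending in character ch
    best = {
        ch: (math.log(char_probs[0][ch]) + lm_weight * math.log(lm["^"][ch]), ch)
        for ch in alphabet
    }
    for probs in char_probs[1:]:
        new_best = {}
        for ch in alphabet:
            emit = math.log(probs[ch])  # brain-decoder evidence for ch
            score, path = max(
                (s + lm_weight * math.log(lm[prev][ch]) + emit, seq)
                for prev, (s, seq) in best.items()
            )
            new_best[ch] = (score, path + ch)
        best = new_best
    return max(best.values())[1]
```

With `lm_weight=0` the decoder simply picks the most probable character at each keystroke; raising the weight lets bigram statistics overrule ambiguous keystrokes, a miniature version of how a language model can lower the character error rate.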

Future Implications of Non-Invasive Neuroprosthetics

These findings suggest that non-invasive BCIs could become a reliable way to restore communication for individuals with severe motor impairments. Because they require no surgical implants, scaled-up non-invasive techniques could also prove more accessible than invasive options. The study underscores ongoing efforts to refine these technologies toward real-time decoding that remains non-invasive.

Conclusions and Next Steps in Brain-Computer Interfaces

The results of the study highlight significant strides towards usable brain-computer interfaces capable of decoding language from neural recordings. As researchers continue to investigate the interplay between brain signals and typing behavior, it is expected that enhanced models will facilitate smoother communication for individuals unable to engage in conventional modes of expression. Future research is anticipated to explore expanding the vocabulary and adaptability of these systems in practical settings.
